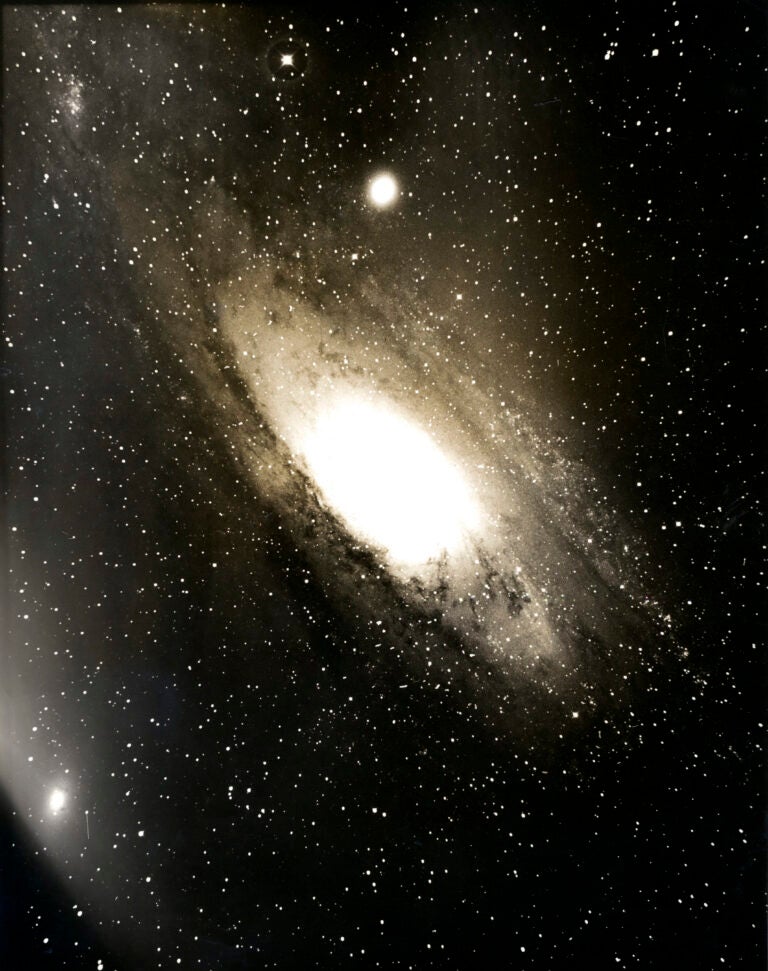

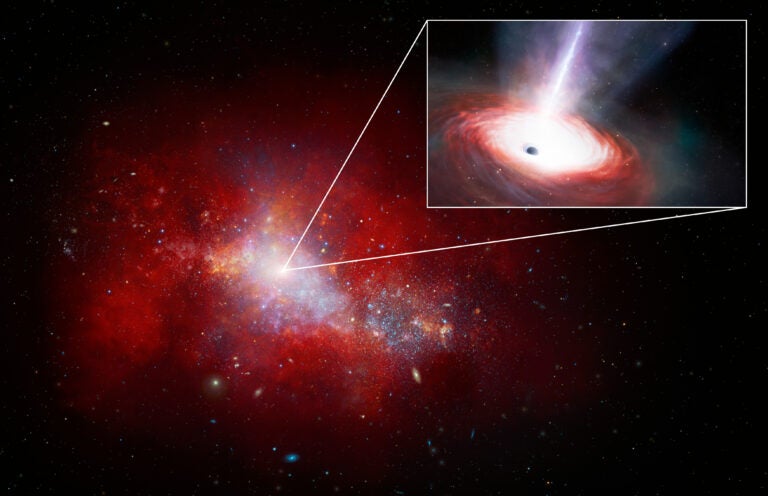

Spiral galaxy M106 harbors a water megamaser — amplified microwave emission from water molecules — near its massive central black hole. The maser provides an independent way to measure M106’s distance and thus helps calibrate the cosmic distance ladder, leading to more accurate values for the Hubble constant.

Something appears amiss in cosmology. A tension has arisen from attempts to measure the universe’s current expansion rate, known as the Hubble constant.

Large international teams have used two general methods to determine it. All the groups have been extremely diligent in their research and have cross-checked their results, and their measurements seem rock solid. But the practitioners of one approach can’t quite come to agreement with practitioners of the other.

The stakes are high. As Nobel laureate Adam Riess of the Space Telescope Science Institute and Johns Hopkins University explains, “The choices now are either a conspiracy of errors, not just in one measurement but in multiple measurements . . . or there’s some kind of interesting new physics in the universe.”

The Hubble Wars

Controversies surrounding the Hubble constant are hardly new to cosmology. This parameter, often called H-naught (and abbreviated H0), is fundamental to determining the age of the universe and its ultimate fate, giving astronomers a powerful incentive to get it right.

To measure H0 directly, astronomers need to observe many galaxies and glean two key pieces of information from each one: its distance and the speed at which it moves away from us. The latter comes directly from measuring how much the galaxy’s light has shifted toward the red. But determining distances proves to be much trickier.
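The relationship being measured is Hubble’s law, v = H0 × d. The following minimal Python sketch of the direct approach assumes small redshifts, where the recession velocity is roughly the speed of light times the redshift (v ≈ cz). It fits the best slope through the origin by least squares. The galaxy distances and redshifts are invented for illustration.

```python
C_KM_S = 299_792.458  # speed of light in km/s

def recession_velocity(z):
    """Low-redshift approximation v = c * z (valid only for z much less than 1)."""
    return C_KM_S * z

def fit_hubble_constant(distances_mpc, velocities_km_s):
    """Least-squares slope of v = H0 * d forced through the origin, in km/s/Mpc."""
    num = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    den = sum(d * d for d in distances_mpc)
    return num / den

# Hypothetical galaxies: (distance in Mpc, measured redshift)
galaxies = [(50.0, 0.0122), (120.0, 0.0291), (200.0, 0.0489), (350.0, 0.0850)]
distances = [d for d, _ in galaxies]
velocities = [recession_velocity(z) for _, z in galaxies]
print(f"H0 ≈ {fit_hubble_constant(distances, velocities):.1f} km/s/Mpc")
```

For these made-up numbers the fit lands near 73. The hard part in practice is the distance column, not the arithmetic.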

From the 1960s to the 1980s, a team led by Allan Sandage of the Carnegie Observatories consistently measured values of H0 around 50 to 55 kilometers per second per megaparsec. (A megaparsec equals 3.26 million light-years.) A competing team led by Gérard de Vaucouleurs of the University of Texas obtained figures around 100. This discrepancy by a factor of two was so extreme that the scientific dispute degenerated into personal animosity.

Both teams used a traditional “distance ladder” approach to measure distances.

They monitored Cepheid variable stars in other galaxies. The luminosities of these supergiant stars correlate with their periods of variation, which makes them excellent “standard candles”: objects that radiate a well-known amount of light. Astronomers first measure the distances to Cepheids in our Milky Way using trigonometric parallax, which calibrates this period-luminosity relation. They can then calculate a galaxy’s distance by watching its individual Cepheids brighten and fade. Each star’s period reveals its true luminosity, and comparing that with how bright the star appears from Earth yields the distance.
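In code, the Cepheid rung reduces to two steps. First, a period-luminosity (Leavitt law) relation turns the period into an absolute magnitude. Second, the difference between apparent and absolute magnitude (the distance modulus, m − M = 5 log10(d / 10 pc)) becomes a distance. The slope and zero point below are illustrative round numbers, not any team’s published calibration, and the sketch ignores dust.

```python
import math

# Illustrative V-band period-luminosity coefficients (assumed for this sketch;
# real calibrations differ by wavelength band and by team).
PL_SLOPE = -2.43
PL_ZERO_POINT = -4.05  # absolute magnitude of a Cepheid with a 10-day period

def cepheid_absolute_magnitude(period_days):
    """Absolute magnitude implied by the period: M = slope * (log10 P - 1) + zero point."""
    return PL_SLOPE * (math.log10(period_days) - 1.0) + PL_ZERO_POINT

def distance_from_modulus(apparent_mag, absolute_mag):
    """Invert m - M = 5 * log10(d / 10 pc); returns the distance in parsecs."""
    return 10 ** ((apparent_mag - absolute_mag) / 5.0 + 1.0)

# Hypothetical Cepheid: 30-day period, mean apparent magnitude 25.0
M = cepheid_absolute_magnitude(30.0)
d_pc = distance_from_modulus(25.0, M)
print(f"M = {M:.2f}, distance ≈ {d_pc / 1e6:.1f} Mpc")
```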

The Hubble Wars appeared to wane in 2001, when the Hubble Space Telescope’s Key Project published an H0 of 72 with an uncertainty range of plus or minus 8. By using Hubble, Wendy Freedman (now at the University of Chicago) and her colleagues monitored Cepheids in galaxies out to about 80 million light-years. They then used these results to calibrate other distance indicators in galaxies out to about 1.3 billion light-years. At that distance, cosmic expansion dominates the speed of galaxies away from us, with little “contamination” from the motions of galaxies within their host clusters.
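The reason for reaching so far out can be put in numbers. On top of the cosmic expansion, galaxies have random “peculiar” motions of a few hundred kilometers per second. The sketch below assumes 300 km/s for every galaxy and the Key Project’s H0 of 72. It shows how the fractional contamination shrinks with distance.

```python
H0_KEY_PROJECT = 72.0      # km/s/Mpc
PECULIAR_KM_S = 300.0      # assumed typical peculiar velocity of a galaxy
MLY_PER_MPC = 3.26         # million light-years per megaparsec

def contamination_fraction(distance_mly, h0=H0_KEY_PROJECT, v_pec=PECULIAR_KM_S):
    """Peculiar velocity as a fraction of the Hubble-flow velocity h0 * d."""
    distance_mpc = distance_mly / MLY_PER_MPC
    return v_pec / (h0 * distance_mpc)

for d in (10, 80, 1300):   # distances in millions of light-years
    print(f"{d:>5} million ly: {100 * contamination_fraction(d):.0f}% of the expansion velocity")
```

Under these assumptions, the random motion exceeds the expansion velocity at 10 million light-years but is only about 1 percent of it at 1.3 billion light-years.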

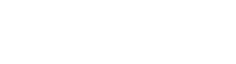

More recently, teams that employ the traditional distance ladder method have measured H0 values of about 73, consistent with the Key Project, but with greater precision. However, teams that study the cosmic microwave background (CMB), the leftover radiation from the Big Bang, are calculating H0 values of around 67. And all of these measurements have become so precise that their ranges of uncertainty no longer overlap.

The Hubble constant is 73

There’s still a lingering suspicion among many cosmologists that the Hubble tension will eventually disappear, a result of measurement or systematic errors. Although that position remains tenable, recent advances in measurement tools and techniques are pointing in the opposite direction. “There’s less than a 0.01 percent chance of this kind of difference occurring just by chance,” Riess says.

A case in point is the latest result from SH0ES — the Supernova H0 for the Equation of State — a large international consortium Riess leads. In 2018, the team published an H0 of 73.5 with an uncertainty of only 2.2 percent.

SH0ES uses the same distance ladder method employed by the Key Project, but it adds powerful new measurement tools. The most important are type Ia supernovae, exploding white dwarfs that reach a relatively uniform peak luminosity. That peak brightness does vary somewhat, but brighter explosions fade more slowly. By studying how fast each one brightens and fades, scientists can correct for those variations, which makes type Ia supernovae ideal standard candles. And these supernovae are incredibly bright, so they can be seen at far greater distances than Cepheids.
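One common form of this correction is a linear “width-luminosity” (Phillips-type) relation. It adjusts the peak absolute magnitude according to Δm15, the number of magnitudes the supernova fades in the 15 days after peak. The zero point and slope below are hypothetical, chosen purely for illustration. SH0ES and other teams use their own, more elaborate light-curve fitting.

```python
# Hypothetical calibration constants, chosen only for illustration.
M_REF = -19.3      # peak absolute magnitude of a supernova with dm15 = 1.1
SLOPE = 0.8        # magnitudes of correction per magnitude of dm15

def standardized_peak_magnitude(dm15):
    """Peak absolute magnitude implied by the decline rate dm15 (mag faded in 15 days).

    A larger dm15 means a faster fade, which goes with a fainter
    (numerically larger) absolute magnitude.
    """
    return M_REF + SLOPE * (dm15 - 1.1)

slow = standardized_peak_magnitude(0.9)   # slow decliner: intrinsically brighter
fast = standardized_peak_magnitude(1.5)   # fast decliner: intrinsically fainter
print(f"slow decliner: {slow:.2f}, fast decliner: {fast:.2f}")
```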

Riess and his colleagues are particularly interested in galaxies that are close enough for Cepheid monitoring but also have hosted type Ia supernovae in recent years. In each such galaxy, the Cepheid distance reveals the supernova’s true luminosity, which calibrates supernovae as distance indicators for far more remote galaxies. His team has analyzed 19 such galaxies to date, with another 19 to come, and each one provides an independent calibration. To cross-check their Cepheid and supernova results, SH0ES also uses geometric distance indicators, such as eclipsing binary stars in the Large Magellanic Cloud and water masers in the spiral galaxy M106.
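This calibration step can be sketched as follows, with every number invented. Cepheid distance moduli for a few host galaxies fix the supernovae’s absolute magnitude. That magnitude then turns the apparent magnitudes of distant supernovae into distances, and their velocities turn those distances into H0. The sketch assumes the supernova magnitudes are already standardized and dust-corrected, and it averages v/d rather than performing a full statistical fit.

```python
import statistics

def calibrate_sn_absolute_mag(calibrators):
    """Mean M = m - mu over host galaxies with a Cepheid distance modulus mu and a supernova peak m."""
    return statistics.mean(m - mu for mu, m in calibrators)

def distance_mpc(apparent_mag, absolute_mag):
    """Distance from the distance modulus, converted from parsecs to Mpc."""
    return 10 ** ((apparent_mag - absolute_mag) / 5.0 + 1.0) / 1e6

def hubble_constant(hubble_flow_sne, absolute_mag):
    """Mean of v / d over distant supernovae given as (peak apparent mag, velocity in km/s)."""
    return statistics.mean(v / distance_mpc(m, absolute_mag) for m, v in hubble_flow_sne)

# Hypothetical calibrator galaxies: (Cepheid distance modulus, supernova peak apparent mag)
calibrators = [(31.2, 11.95), (32.0, 12.70), (31.6, 12.35)]
# Hypothetical Hubble-flow supernovae: (peak apparent mag, recession velocity in km/s)
distant = [(15.40, 6_300.0), (16.60, 10_800.0), (17.50, 16_500.0)]

M_sn = calibrate_sn_absolute_mag(calibrators)
print(f"M_SN = {M_sn:.2f}, H0 ≈ {hubble_constant(distant, M_sn):.1f} km/s/Mpc")
```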

And even the Cepheid distances in our own galaxy have become more accurate, thanks to extremely precise parallax measurements from the European Space Agency’s Gaia satellite and improved cameras on Hubble. SH0ES is getting virtually the same H0 as the Key Project, but its range of uncertainty has narrowed significantly. “What’s changed from previous generations is that the data quality is much better,” says Riess.
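Parallax is the geometric foundation of the whole ladder: a star’s distance in parsecs is the reciprocal of its parallax angle in arcseconds. The sketch below uses a hypothetical Cepheid parallax and uncertainty. It also shows why precision matters: to first order, the fractional distance error equals the fractional parallax error, and every rung above inherits it. This simple inversion is reasonable only when the parallax error is small.

```python
import math

def parallax_distance_pc(parallax_mas):
    """Distance in parsecs from a parallax in milliarcseconds: d = 1000 / p."""
    return 1000.0 / parallax_mas

def distance_modulus_error(parallax_mas, parallax_err_mas):
    """First-order error in m - M: (5 / ln 10) * (sigma_p / p), about 2.17 * sigma_p / p."""
    return 5.0 / math.log(10) * parallax_err_mas / parallax_mas

# Hypothetical Milky Way Cepheid: parallax 0.40 mas, measured to +/- 0.03 mas
p, sigma_p = 0.40, 0.03
print(f"d ≈ {parallax_distance_pc(p):.0f} pc, "
      f"fractional error ≈ {100 * sigma_p / p:.1f}%, "
      f"distance-modulus error ≈ {distance_modulus_error(p, sigma_p):.3f} mag")
```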

Yet another group, the Carnegie Supernova Project, is reaching a nearly identical result. Its most recent paper lists two H0 values, 73.2 and 72.7, taken through different wavelength filters, with uncertainties of just 2.3 and 2.1, respectively.

Carnegie team leader Christopher Burns says his group uses the same Cepheid, eclipsing binary, and maser data that SH0ES does, but Carnegie employs a different method for analyzing supernova data and making corrections for variations in luminosity and the reddening effects of dust.
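The simplest textbook version of a dust correction works like this. Dust dims and reddens starlight, so the measured excess in color, E(B−V), multiplied by a ratio R_V gives the extinction in magnitudes. That extinction is then subtracted from the observed magnitude. The sketch assumes R_V = 3.1, the average for Milky Way dust. The value in supernova host galaxies can differ, which is one reason such assumptions matter. The supernova’s magnitudes and colors are hypothetical.

```python
R_V = 3.1  # assumed ratio of total to selective extinction (Milky Way average)

def extinction_v(observed_b_minus_v, intrinsic_b_minus_v, r_v=R_V):
    """V-band extinction A_V = R_V * E(B-V), where E(B-V) is the color excess."""
    color_excess = observed_b_minus_v - intrinsic_b_minus_v
    return r_v * color_excess

def dust_corrected_magnitude(observed_v, observed_b_minus_v, intrinsic_b_minus_v, r_v=R_V):
    """Remove dust dimming; the corrected magnitude is smaller (brighter)."""
    return observed_v - extinction_v(observed_b_minus_v, intrinsic_b_minus_v, r_v)

# Hypothetical supernova: observed V = 14.60, observed B-V = 0.15, intrinsic B-V = 0.0
print(dust_corrected_magnitude(14.60, 0.15, 0.0))            # Milky Way-like dust
print(dust_corrected_magnitude(14.60, 0.15, 0.0, r_v=2.0))   # a different dust law
```

Here, changing R_V from 3.1 to 2.0 shifts the corrected magnitude by 0.165 magnitude, which corresponds to roughly 8 percent in distance.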

“We’ve done these corrections in slightly different ways with different assumptions and different data sets, but we’re coming up with the same answer,” says Burns. “So as far as supernovae are concerned, I’m pretty confident we’re doing the right thing.”

But Burns is quick to add that SH0ES and Carnegie work with the same Cepheid data and use similar methods to study them. That part gives him a bit of unease. “I would love to have another method of figuring out distances to these supernovae and making sure that agrees as well,” he says.

Adding confidence to the SH0ES and Carnegie results, the H0 Lenses in COSMOGRAIL’s Wellspring (H0LiCOW) group recently announced a new H0 measurement. Using a completely independent method, this international team has spent years watching the flickering brightnesses of distant quasars that are gravitationally lensed by foreground galaxies. The light from each lensed quasar takes multiple paths to reach Earth, so the same flicker shows up in the separate images at different times. Those time delays depend on the mass of the lensing galaxy and on the distances involved. Because those distances scale inversely with H0, the H0LiCOW astronomers can derive H0 from the delays once they have modeled each lens. The team recently measured an H0 of 72.5, with 3 percent uncertainty.
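The key scaling is this: for a given lens model, the predicted delay is proportional to a combination of cosmological distances called the “time-delay distance,” and every such distance scales as 1/H0. The sketch below makes several assumptions. It uses a flat Lambda CDM universe with a matter fraction of 0.3, invented redshifts, and a hypothetical Fermat-potential difference that stands in for the detailed lens mass modeling the real analysis requires. It generates a delay for H0 = 72 and then recovers that value.

```python
import math

C_KM_S = 299_792.458                  # speed of light, km/s
M_PER_MPC = 3.0857e22                 # metres per megaparsec
ARCSEC = math.pi / (180.0 * 3600.0)   # radians per arcsecond
SECONDS_PER_DAY = 86_400.0

def comoving_distance_mpc(z, h0, omega_m=0.3, steps=2000):
    """Comoving distance in a flat Lambda CDM universe, integrated with the trapezoid rule."""
    omega_l = 1.0 - omega_m
    dz = z / steps
    total = 0.0
    for i in range(steps + 1):
        zi = i * dz
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight / math.sqrt(omega_m * (1.0 + zi) ** 3 + omega_l)
    return (C_KM_S / h0) * total * dz

def time_delay_distance_mpc(z_lens, z_source, h0, omega_m=0.3):
    """D_dt = (1 + z_d) * D_d * D_s / D_ds, built from angular diameter distances."""
    dc_lens = comoving_distance_mpc(z_lens, h0, omega_m)
    dc_source = comoving_distance_mpc(z_source, h0, omega_m)
    d_lens = dc_lens / (1.0 + z_lens)
    d_source = dc_source / (1.0 + z_source)
    d_lens_source = (dc_source - dc_lens) / (1.0 + z_source)  # valid for a flat universe
    return (1.0 + z_lens) * d_lens * d_source / d_lens_source

def predicted_delay_days(z_lens, z_source, h0, fermat_diff_arcsec2, omega_m=0.3):
    """Delay = D_dt / c * (Fermat potential difference), converted to days."""
    d_dt_m = time_delay_distance_mpc(z_lens, z_source, h0, omega_m) * M_PER_MPC
    seconds = d_dt_m / (C_KM_S * 1000.0) * fermat_diff_arcsec2 * ARCSEC ** 2
    return seconds / SECONDS_PER_DAY

def h0_from_delay(observed_days, z_lens, z_source, fermat_diff_arcsec2, omega_m=0.3):
    """All distances scale as 1/H0, so rescale a reference prediction to match the data."""
    reference_h0 = 70.0
    reference_days = predicted_delay_days(z_lens, z_source, reference_h0,
                                          fermat_diff_arcsec2, omega_m)
    return reference_h0 * reference_days / observed_days

# Hypothetical lens: z_lens = 0.5, z_source = 2.0, Fermat-potential difference 0.5 arcsec^2
observed = predicted_delay_days(0.5, 2.0, 72.0, 0.5)   # pretend this delay was measured
print(f"delay ≈ {observed:.0f} days, "
      f"recovered H0 = {h0_from_delay(observed, 0.5, 2.0, 0.5):.1f}")
```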

“Throughout our analysis, we kept the Hubble constant blinded, meaning we never knew what value we were getting throughout the entire analysis,” says H0LiCOW team leader Sherry Suyu of the Max Planck Institute for Astrophysics in Garching, Germany. “That’s important because that avoids confirmation bias. So it’s not that we subconsciously favor one H0 over another.”

The H0LiCOW result is beautifully consistent with SH0ES and Carnegie. In other words, all the teams that measure H0 in the local universe are getting the same result: about 73.

No, the Hubble constant is 67

Were it not for the CMB measurements, the Hubble constant would probably be considered a solved problem, and researchers would move on to other projects. But the CMB results are highly compelling, even though they are not direct measurements of H0. Instead, they are predictions of what H0 should be, given known conditions in the early cosmos and how the universe’s main ingredients influence cosmic expansion.

The material that gave rise to the CMB was forged in the Big Bang. For its first 380,000 years, the universe was a dense, opaque sea of electrically charged gas known as plasma. Sound waves coursing through this plasma compressed and rarefied matter into a characteristic pattern of high- and low-density regions. These are now imprinted on the CMB as slight temperature irregularities. By the end of that period, the universe had expanded and cooled enough for electrons to combine with atomic nuclei to form atoms. This made the gas transparent, so the Big Bang’s remnant radiation could stream freely in all directions. Over the next 13.8 billion years, cosmic expansion has redshifted this ancient light into the microwave portion of the spectrum.
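The shift from a near-infrared glow to microwaves is simple arithmetic. The sketch assumes the gas was about 3,000 kelvins when it became transparent and that the light has since been stretched by a factor of roughly 1,100 (that is, 1 + z ≈ 1,100). The radiation keeps a blackbody spectrum whose temperature falls as 1/(1 + z), so Wien’s displacement law gives its peak wavelength then and now.

```python
WIEN_B = 2.897771955e-3  # Wien's displacement constant, metre-kelvins

def peak_wavelength_m(temperature_k):
    """Wavelength of peak blackbody emission (per unit wavelength), from Wien's law."""
    return WIEN_B / temperature_k

T_EMIT = 3000.0      # assumed temperature when the universe became transparent, kelvins
STRETCH = 1100.0     # assumed 1 + z since then

t_today = T_EMIT / STRETCH
print(f"then: {peak_wavelength_m(T_EMIT) * 1e9:.0f} nm at {T_EMIT:.0f} K")
print(f"now:  {peak_wavelength_m(t_today) * 1e3:.2f} mm at {t_today:.2f} K")
```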

The precise mixture of dark matter and normal matter affected how those early sound waves imprinted the CMB with temperature variations. NASA’s WMAP satellite and Europe’s Planck satellite have measured these irregularities with increasing precision across the entire sky, with Planck providing the most sensitive map of all. A detailed analysis of the Planck data, combined with other data, enabled cosmologists to measure the universe’s contents as 68.3 percent dark energy, 26.8 percent dark matter, and 4.9 percent “normal” matter. When cosmologists plug these numbers into Einstein’s equations from the general theory of relativity, they predict an H0 of 67.4, with an uncertainty range of only 0.5.

“The CMB predictions for H0 assume that the contents of the universe are well described by atomic matter, cold dark matter, and dark energy. If this description is incomplete, the predictions could be in error, but there is no evidence for this,” says CMB researcher Gary Hinshaw of the University of British Columbia.

The Planck result is consistent with all other CMB studies. But it’s conspicuously lower than the H0 values measured by SH0ES, Carnegie, and H0LiCOW, and their error bars do not overlap.
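A rough way to quantify “do not overlap” is to express the gap between two independent measurements in units of their combined standard uncertainty. The sketch assumes Gaussian, uncorrelated errors and treats each quoted uncertainty as one standard deviation. This is a simplification of the teams’ actual statistical analyses.

```python
import math

def tension_sigma(value_a, err_a, value_b, err_b):
    """Difference between two independent Gaussian measurements, in combined standard deviations."""
    return abs(value_a - value_b) / math.hypot(err_a, err_b)

def two_sided_p(n_sigma):
    """Chance of a fluctuation at least this large, in either direction."""
    return math.erfc(n_sigma / math.sqrt(2))

shoes, shoes_err = 73.5, 73.5 * 0.022    # SH0ES 2018: 2.2 percent uncertainty
planck, planck_err = 67.4, 0.5           # Planck prediction
n = tension_sigma(shoes, shoes_err, planck, planck_err)
print(f"{n:.1f} sigma, chance probability ≈ {two_sided_p(n):.1e}")
```

With just these two numbers, the gap comes out to roughly 3.6 sigma. Quoted tension figures depend on which data sets are combined.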

“I have to confess that as someone whose professional training dates to the era of a factor-of-two uncertainty in H0, I have a difficult time becoming terribly agitated by disagreements of a few percent!” says Penn State University astronomer Donald Schneider.

But what happens if this tension over H0 persists?

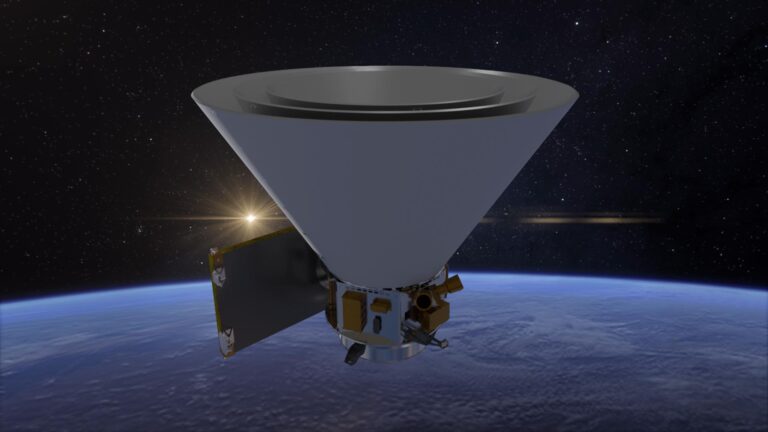

Astronomers studied 85 Cepheids in the spiral galaxy NGC 5584 in Virgo and determined that it lies 70 million light-years away. They then applied this distance to the galaxy’s Supernova 2007af to help calibrate distances to these far more luminous objects.

A lot of unknown physics

If future observations fail to show that this tension results from measurement errors, it will throw a monkey wrench into the prevailing cosmological model, known as Lambda CDM. Lambda is a Greek letter that symbolizes Einstein’s cosmological constant, an unchanging property of space that exerts a tiny but inexorable repulsive force. The model thus tacitly assumes that the cosmological constant is the dark energy that is causing cosmic expansion to accelerate. CDM stands for “cold dark matter,” meaning that most of the universe’s mass consists of heavy particles that move relatively slowly.
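The deceleration parameter shows why a cosmological constant makes expansion speed up. For a flat universe containing only matter and Lambda, q0 = Ωm/2 − ΩΛ, and a negative value means acceleration. The sketch plugs in the Planck-based fractions quoted in the CMB discussion (dark plus normal matter, and dark energy) and ignores radiation, which is negligible today.

```python
def deceleration_parameter(omega_m, omega_lambda):
    """q0 = omega_m / 2 - omega_lambda for a universe of matter plus a cosmological constant."""
    return omega_m / 2.0 - omega_lambda

def acceleration_onset_redshift(omega_m, omega_lambda):
    """Redshift at which expansion switched from slowing down to speeding up.

    Solves omega_m * (1 + z)**3 = 2 * omega_lambda, where the decelerating
    pull of matter balances the repulsion from Lambda.
    """
    return (2.0 * omega_lambda / omega_m) ** (1.0 / 3.0) - 1.0

omega_m = 0.268 + 0.049   # dark matter + normal matter
omega_lambda = 0.683      # dark energy treated as a cosmological constant
print(f"q0 = {deceleration_parameter(omega_m, omega_lambda):.2f}")
print(f"acceleration began near z = {acceleration_onset_redshift(omega_m, omega_lambda):.2f}")
```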

Lambda CDM beautifully explains the cosmos and is consistent with virtually every piece of astronomical data. The model assumes that the universe is spatially flat on large scales, meaning two parallel light beams traveling unhindered through intergalactic space will remain parallel over billions of light-years. It also assumes that Einstein’s general relativity explains the universe on large scales.

This model has been so successful that cosmologists would be loath to give it up, or even to make substantial modifications. But as Riess explains: “There’s a lot of unknown physics in that model.”

For example, we don’t know what kind of particle constitutes dark matter, or even if it is a particle. After all, numerous experiments to detect dark matter particles have come up empty. And we don’t know what is causing cosmic expansion to accelerate. It might be Einstein’s cosmological constant; it might be some kind of dynamical field that changes over time; or it might be something else.

“I don’t think we should be totally stunned if we can’t explain the dynamics of the universe across all of cosmic time to 1 percent when we don’t really understand the physics of 95 percent of the universe,” says Riess.

Hinshaw adds, “It’s a great surprise that Lambda CDM works as well as it does.”

A daunting challenge

The H0 tension presents a challenge to theorists. Although they are a creative lot, they can’t just concoct any idea to resolve this cosmic conundrum. “It’s really hard to change Lambda CDM in a way that actually fits this enormous suite of data from the early universe and the late universe in a way that works,” explains Princeton University physicist Joanna Dunkley.

“The consensus tends to be that if you’re looking for a source, it most likely involves something about the physics of the early universe,” adds Riess.

Vivian Poulin of Johns Hopkins University recently published a promising idea. He and three colleagues posit that a form of dark energy that modestly affected cosmic expansion infused the universe from about 20,000 to 100,000 years after the Big Bang. Poulin says this dark energy “could explain this mismatch in the measurements,” adding, “The beauty of the idea is that it’s not so exotic. We have already observed similar effects at different times.”

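Another plausible idea is the existence of a fourth type of neutrino currently unknown to science. This ethereal particle, known as a sterile neutrino, would have increased the amount of radiation in the early universe. When plugged into Lambda CDM, the extra radiation increases the Hubble constant predicted from the CMB. It speeds up the early expansion and shrinks the distance sound waves could travel before the CMB formed, so matching the CMB’s observed pattern then requires a higher present-day expansion rate.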
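The size of that extra radiation can be estimated from the standard relation between neutrino and photon energy densities: ρ_rad = ρ_γ [1 + (7/8)(4/11)^(4/3) N_eff]. Here N_eff is the effective number of neutrino species, about 3.04 for the three known types. The sketch assumes that the hypothetical sterile neutrino adds one full species. During the radiation era, the expansion rate scales as the square root of the energy density.

```python
import math

# Energy density of one neutrino species relative to photons (after electron-positron annihilation)
PER_SPECIES = (7.0 / 8.0) * (4.0 / 11.0) ** (4.0 / 3.0)

def radiation_density_ratio(n_eff):
    """Total radiation energy density in units of the photon energy density."""
    return 1.0 + PER_SPECIES * n_eff

standard = radiation_density_ratio(3.046)       # three known neutrino types
with_sterile = radiation_density_ratio(4.046)   # assumed: one extra, fully populated species
boost = with_sterile / standard
print(f"radiation up {100 * (boost - 1):.0f}%, "
      f"early expansion rate up {100 * (math.sqrt(boost) - 1):.1f}%")
```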

Both of these ideas could relieve the H0 tension without making radical changes to Lambda CDM. But other ideas would deliver more of a hammer blow.

For example, perhaps the overall spatial geometry of the universe is not flat after all. A non-flat universe would be dynamically unstable, however, and it would contradict CMB observations showing that the universe must be extremely close to flat. “It would be very unusual for the universe to be almost flat, but not quite, today. That’s hard to engineer,” says Hinshaw.

Or perhaps dark energy is not the cosmological constant, but is caused by some kind of dynamical field that changes over time. Poulin notes that such a field would have “exotic” properties because instead of diluting as the universe expands, it would grow denser. Although Poulin says such a field is “not absolutely impossible from a theoretical standpoint, people are not at ease with it. It’s a bit weird.”
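The distinction can be written in one line. Consider a component with a constant equation-of-state parameter w, defined as pressure divided by energy density. Its density scales with the cosmic scale factor a as ρ ∝ a^(−3(1+w)). The sketch compares three cases: matter (w = 0), a cosmological constant (w = −1), and a hypothetical field with w = −1.2, which grows denser as space expands.

```python
def density_ratio(scale_factor_growth, w):
    """Factor by which energy density changes when the universe expands by the given factor.

    For a component with constant equation of state w, density ~ a**(-3 * (1 + w)).
    """
    return scale_factor_growth ** (-3.0 * (1.0 + w))

for label, w in [("matter", 0.0), ("cosmological constant", -1.0),
                 ("hypothetical field", -1.2)]:
    print(f"{label:>22}: density x {density_ratio(2.0, w):.3f} when the universe doubles in size")
```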

An even more radical proposal is that we live in a region of the universe with an anomalously low density. Dunkley states the objection of many cosmologists to this idea: “It doesn’t make sense that our local region should be that strange compared to the rest of the universe.”

Relieving the tension

All the observing teams express high confidence in their methodologies and results. Fortunately, unlike the Hubble Wars of yesteryear, the modern discrepancy has not devolved into personal animosity or professional disrespect. Instead, it has motivated an insatiable desire to get to the bottom of a profound mystery. For observers, it means reducing their errors even further, down to 1 percent if possible. It also means new types of measurements.

For example, Freedman leads a large international group that will soon publish a new H0 using the distance ladder method. But instead of basing the result on Cepheids, her team is using Hubble to observe the most luminous red giants in the halos of distant galaxies. The brightness of these stars tops out at a specific maximum luminosity, a cutoff known as the tip of the red giant branch, so the brightest red giants can serve as standard candles. By observing in galactic halos, her team can make brightness measurements that are less contaminated by the light of background stars. Cepheids, in contrast, are young stars found in crowded galactic disks, where other stars contribute noise to the data. Red giants are also simpler objects than Cepheids, which have complex, dynamic atmospheres.

For these and other reasons, Freedman claims the red giants are more precise distance indicators than Cepheids, and they produce less scatter in the data. So far, her team has measured red giants in 17 galaxies that also have hosted type Ia supernovae. This new method provides “a completely independent, ground-up recalibration of type Ia supernovae. It’s pretty exciting,” she says.
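One standard way to locate the red giant cutoff starts by binning the stars’ brightnesses into a luminosity function. An edge-detecting filter then runs across it, and the tip appears where the star counts jump most sharply. The sketch below applies a simple [−1, 0, +1] kernel to crude synthetic photometry with an invented tip at apparent magnitude 27.5. To convert the result into a distance, it assumes a tip absolute magnitude of about −4.05 in the I band. Real analyses smooth the data and model measurement errors much more carefully.

```python
import random

def find_tip(mags, bin_width=0.05):
    """Magnitude where star counts jump most sharply toward fainter stars."""
    lo = min(mags)
    nbins = int((max(mags) - lo) / bin_width) + 1
    counts = [0] * nbins
    for m in mags:
        counts[int((m - lo) / bin_width)] += 1
    # Edge filter [-1, 0, +1]: largest where few stars lie just brighter and many just fainter.
    best = max(range(1, nbins - 1), key=lambda i: counts[i + 1] - counts[i - 1])
    return lo + (best + 0.5) * bin_width

def tip_distance_mpc(tip_apparent, tip_absolute=-4.05):
    """Distance from the tip's distance modulus (tip_absolute is an assumed calibration)."""
    return 10 ** ((tip_apparent - tip_absolute) / 5.0 + 1.0) / 1e6

# Crude synthetic halo photometry (no real data involved)
rng = random.Random(1)
TRUE_TIP = 27.5
red_giants = [rng.uniform(TRUE_TIP, TRUE_TIP + 1.0) for _ in range(2000)]  # fainter than the tip
brighter = [rng.uniform(TRUE_TIP - 1.0, TRUE_TIP) for _ in range(100)]     # sparse brighter stars
mags = [m + rng.gauss(0.0, 0.02) for m in red_giants + brighter]           # photometric noise

tip = find_tip(mags)
print(f"tip ≈ {tip:.2f} mag, distance ≈ {tip_distance_mpc(tip):.1f} Mpc")
```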

Large survey telescopes will help astronomers precisely measure how the density variations in the early universe imprinted themselves on the large-scale distribution of galaxies. These signatures, known as baryon acoustic oscillations, will enable scientists to measure how cosmic expansion evolved during the universe’s middle ages, which in turn will help connect CMB observations of the early universe and distance ladder measurements of the modern-day universe.

Dunkley is now working with the Atacama Cosmology Telescope in Chile, which is making detailed measurements of the CMB’s polarization. This result will provide an independent H0 measurement. “We’ll be able to add our data to the Planck data and actually further shrink the uncertainty on the Hubble constant from the CMB, and then see if it’s even more different from the local one or whether the tension is reduced,” she says.

Further down the road, the LIGO and Virgo gravitational wave detectors will make their own H0 measurements. From just one event — the neutron star merger observed August 17, 2017 — LIGO scientists measured an H0 of 70, but with an uncertainty of about 15 percent. Once LIGO registers many dozens of neutron star mergers over the next decade, scientists should be able to calibrate them as “standard sirens” and nail down H0 to within 1 percent.
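In a standard siren, the gravitational-wave signal itself encodes the source’s distance, and the host galaxy supplies the velocity, so each event is a direct point on Hubble’s law. Suppose, as an idealized assumption, that every future event were independent and as uncertain as the first one, about 15 percent. Real events vary with their orientation and distance. Under that assumption, the combined uncertainty would shrink as one over the square root of the number of events.

```python
import math

def combined_fractional_error(single_event_error, n_events):
    """Uncertainty of the average of n independent, equally uncertain measurements."""
    return single_event_error / math.sqrt(n_events)

for n in (1, 10, 50, 100):
    print(f"{n:>3} events: about {100 * combined_fractional_error(0.15, n):.1f}% uncertainty")
```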

Right now, cosmologists calculate that the universe is 13.8 billion years old, based on Planck’s H0 of 67.4. But if H0 is actually closer to 73, it could shave hundreds of millions of years off the universe’s age, depending on what changes would be required in Lambda CDM. And more importantly, a resolution of the Hubble tension could also shed light on dark energy, which controls the universe’s ultimate fate. If dark energy is indeed Einstein’s cosmological constant, the cosmos will expand forever and lead to a Big Chill. But a dynamical dark energy could become so powerful that it would tear all matter to shreds in a Big Rip. And according to Hinshaw, “If the dark energy is unstable, it could decay into a new substance and change the laws of physics entirely, with unpredictable results.”
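The link between H0 and age can be made concrete. For a flat universe of matter plus a cosmological constant, the age has a closed form: t0 = [2 / (3 H0 √ΩΛ)] · arsinh(√(ΩΛ/Ωm)). The sketch reproduces the roughly 13.8-billion-year figure from Planck’s numbers. It then asks what happens at H0 = 73 under one hypothetical adjustment: keeping the physical matter density Ωm·h² fixed (with h = H0/100), which loosely mimics what the CMB constrains. It is an illustration, not a fit to any data.

```python
import math

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.15576e16

def age_gyr(h0, omega_m):
    """Age of a flat matter + Lambda universe in billions of years (radiation neglected)."""
    omega_lambda = 1.0 - omega_m
    hubble_time_gyr = KM_PER_MPC / h0 / SECONDS_PER_GYR   # 1 / H0 expressed in Gyr
    return (2.0 / (3.0 * math.sqrt(omega_lambda))) * math.asinh(
        math.sqrt(omega_lambda / omega_m)) * hubble_time_gyr

planck_h0, planck_omega_m = 67.4, 0.317          # 26.8% dark + 4.9% normal matter
print(f"H0 = 67.4: {age_gyr(planck_h0, planck_omega_m):.2f} Gyr")

# Hypothetical: H0 = 73 with omega_m * h**2 held at its Planck-based value
omega_m_h2 = planck_omega_m * (planck_h0 / 100) ** 2
h0_local = 73.0
age_local = age_gyr(h0_local, omega_m_h2 / (h0_local / 100) ** 2)
print(f"H0 = 73.0: {age_local:.2f} Gyr")
```

Under this particular assumption, the age falls by roughly half a billion years. Other changes to Lambda CDM would shift it by different amounts.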

If the tension still exists after observers get down to 1 percent uncertainty, we’ll have “extraordinary evidence” that the tension is for real, says Hinshaw. This would necessitate changes in Lambda CDM, which would be incredibly exciting. He concludes, “The best scenario would be that all of this holds up and it points us in a direction that ultimately gives us more insight about the dark universe — the dark matter and dark energy — which would be fantastic.”