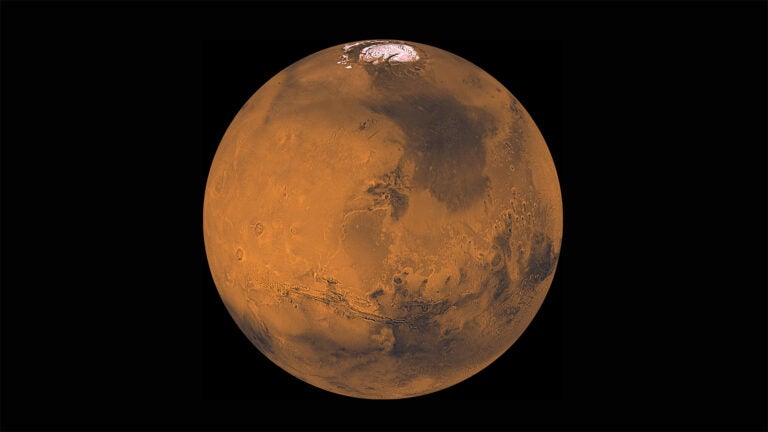

Mars rover Curiosity now has its own built-in curiosity.

NASA recently installed a new AI system called AEGIS on the rover so that it can autonomously choose targets for its laser instrument.

The AEGIS algorithm analyzes images taken by the rover’s navigation camera (Navcam). AEGIS can select a target rock and pinpoint it with the rover’s laser system, ChemCam, before scientists on Earth have time to look at the images. ChemCam then determines what kinds of atoms the rock contains. The new autonomy is especially useful when Curiosity is in the middle of a long drive, or when there are delays in sharing information with scientists on Earth.

It’s not like the AI is coming for scientists’ jobs—most of the targets are still chosen by scientists without the aid of the AI. But Curiosity gets to exercise its decision-making software “a couple times per week,” says NASA Jet Propulsion Laboratory’s Tara Estlin, the AEGIS software lead.

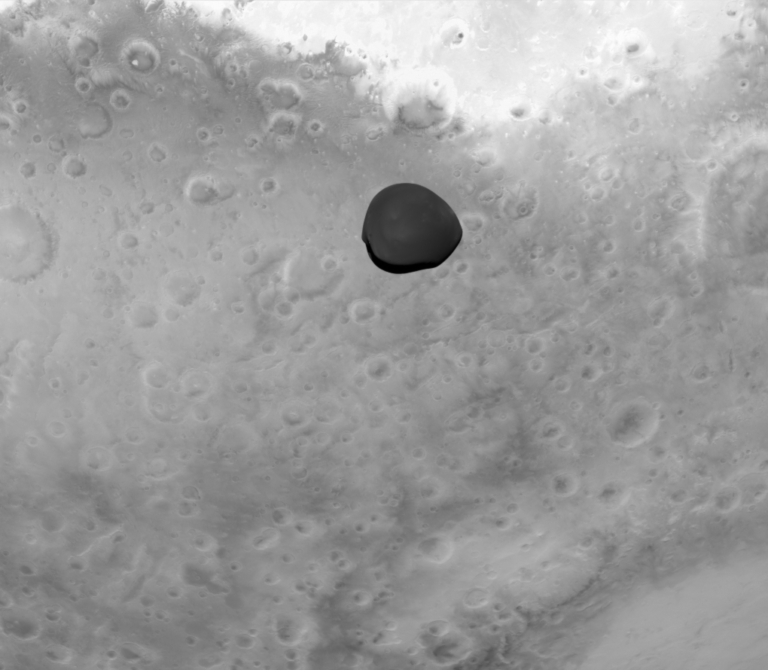

A lot of what NASA does on Mars is study rocks, so AEGIS is a rock detector. It uses computer vision to comb through a digital image, looking for edges of objects. When it finds an object with edges that go all the way around, it defines that as a rock. Then, for each rock, it analyzes the image to determine the rock’s properties, such as its size, shape, brightness, and how far away it is from the rover.
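To make the "edges that go all the way around" idea concrete, here is a toy sketch in Python. It is not AEGIS's actual code: the edge map is hand-drawn, and the names `find_enclosed_regions` and `describe` are made up for illustration. It assumes edges have already been detected and marked with `#`, then treats any patch of non-edge pixels that cannot reach the image border as a candidate rock.

```python
from collections import deque

# Hypothetical, hand-drawn edge map: '#' marks an edge pixel, '.' is everything else.
# The left shape is a closed outline; the right shape is open on one side.
EDGE_MAP = [
    ".........",
    ".####....",
    ".#..#.##.",
    ".#..#.#..",
    ".####.##.",
    ".........",
]

def find_enclosed_regions(edge_map):
    """Return the regions (sets of (row, col)) that are fully surrounded by edges."""
    rows, cols = len(edge_map), len(edge_map[0])
    visited = set()
    regions = []

    def is_edge(r, c):
        return edge_map[r][c] == "#"

    for r0 in range(rows):
        for c0 in range(cols):
            if (r0, c0) in visited or is_edge(r0, c0):
                continue
            # Flood-fill the connected patch of non-edge pixels starting here.
            region, queue = {(r0, c0)}, deque([(r0, c0)])
            visited.add((r0, c0))
            touches_border = False
            while queue:
                r, c = queue.popleft()
                if r in (0, rows - 1) or c in (0, cols - 1):
                    touches_border = True
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and (nr, nc) not in visited and not is_edge(nr, nc)):
                        visited.add((nr, nc))
                        region.add((nr, nc))
                        queue.append((nr, nc))
            # A patch that never reaches the image border is closed in by edges.
            if not touches_border:
                regions.append(region)
    return regions

def describe(region):
    """Compute simple properties of a region: pixel count and center position."""
    size = len(region)
    center_row = sum(r for r, _ in region) / size
    center_col = sum(c for _, c in region) / size
    return {"size": size, "center": (center_row, center_col)}

rocks = find_enclosed_regions(EDGE_MAP)
print([describe(rock) for rock in rocks])
```

Only the closed outline on the left counts as a rock here; the open shape on the right leaks out to the image border and is ignored. Properties such as brightness or distance from the rover would need the original image and range data, which this sketch leaves out.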

Scientists back on Earth set the criteria for which rock properties are interesting, depending on the terrain around Curiosity. “Maybe it’s larger, light-colored rocks; maybe it’s rounder, dark-colored rocks,” says Estlin. Curiosity is currently in an area with a lot of bedrock, or outcrops, which on Mars means flat rocks embedded in the surface, so those are what the rover looks for. AEGIS then ranks the rocks from most interesting to least and points the laser at the most interesting one.
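The ranking step can be pictured as a weighted score. The Python sketch below is an illustration only, not the scoring AEGIS actually uses: the `Candidate` fields, the 0-to-1 property scales, and the weight values are all assumptions. Scientists' preferences become a set of weights, each rock gets a score, and the highest-scoring rock is the one the laser would target.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    """A detected rock with made-up properties, each scaled from 0 to 1."""
    name: str
    size: float        # 0 = small, 1 = large
    brightness: float  # 0 = dark, 1 = light
    roundness: float   # 0 = angular, 1 = round

def score(candidate, weights):
    """Weighted sum of the properties the scientists care about."""
    return sum(w * getattr(candidate, prop) for prop, w in weights.items())

def rank(candidates, weights):
    """Order candidates from most to least interesting."""
    return sorted(candidates, key=lambda c: score(c, weights), reverse=True)

candidates = [
    Candidate("A", size=0.9, brightness=0.8, roundness=0.2),
    Candidate("B", size=0.3, brightness=0.2, roundness=0.9),
    Candidate("C", size=0.5, brightness=0.5, roundness=0.5),
]

# Two hypothetical criteria sets, echoing the examples in Estlin's quote.
LARGER_LIGHT = {"size": 1.0, "brightness": 1.0}
ROUNDER_DARK = {"roundness": 1.0, "brightness": -1.0}

for label, weights in (("larger, light", LARGER_LIGHT), ("rounder, dark", ROUNDER_DARK)):
    ordered = rank(candidates, weights)
    print(label, "->", [c.name for c in ordered], "target:", ordered[0].name)
```

Swapping the weights changes which rock comes out on top without touching the detection step, which is the point of letting scientists set the criteria from Earth.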

Before Curiosity, AEGIS first went up on Opportunity in 2010, but NASA had been developing the software for roughly 10 years before that, says Estlin, who got involved around 2002. “We first tested it on images on the ground,” she says. “We have some research rovers here that are built to test early software.”

The hardest part of adapting AEGIS, Estlin says, was making sure they could integrate it with Curiosity’s system, which is much more complicated than Opportunity’s, without interfering with anything else. For example, ChemCam has some important safeguards that keep it from pointing its laser at the rover itself (which would damage it) or at the Sun (which would damage the optical system ChemCam uses to identify atoms).

In the future, AEGIS will probably have further tricks up its sleeve. “We have another approach that we’ve tested on the ground that instead of looking for edges, it looks for statistical patterns of pixels,” says Estlin. “This lets you find a much larger variety of targets” such as ledges, or layering in bedrock. Layering is especially interesting because it tells us about the history of the planet.