As recently as 10 years ago, most people had little experience with charge-coupled devices (CCDs); today, digital cameras that contain one are commonplace. Astronomers jumped on the CCD bandwagon much earlier, in 1979.

A CCD is an array of capacitors. These capacitors, which are referred to as pixels (short for picture elements), are just devices that can store electric charge. The pixels are arranged in rows and columns on a “chip,” and the chip sits at the focus of the camera. A 4-megapixel chip, for example, has 4 million pixels, arranged in 2,000 rows by 2,000 columns.

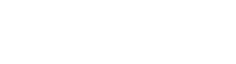

When the camera shutter is open, the camera's (or telescope's) optics focus an image on the chip. Each pixel responds to the light (photons) falling on it: the photons knock electrons loose from atoms in the chip's silicon, and the pixel collects those electrons, building up a charge. When the shutter closes, each pixel retains its charge, which is proportional to the amount of light that struck it.
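The proportionality in that last sentence can be sketched as a toy model in Python. It is not how any real camera software works: the function `expose` and its `qe` parameter (a hypothetical fraction of photons that free an electron, set to an ideal 1.0 by default) are assumptions made for illustration, and the model ignores noise and other real-detector effects.

```python
def expose(photon_rate, exposure_s, qe=1.0):
    """Toy model of charge build-up while the shutter is open.

    photon_rate: 2D list, photons per second falling on each pixel
    exposure_s: how long the shutter stays open, in seconds
    qe: hypothetical fraction of photons that free an electron (1.0 = ideal)
    Returns a 2D list with the charge, in electrons, held by each pixel.
    """
    return [[rate * exposure_s * qe for rate in row] for row in photon_rate]


# Light pattern focused on a tiny 2 x 2 chip (made-up numbers).
scene = [[10, 0], [5, 20]]
short_exposure = expose(scene, 2.0)
long_exposure = expose(scene, 4.0)
print(short_exposure)  # [[20.0, 0.0], [10.0, 40.0]]
print(long_exposure)   # [[40.0, 0.0], [20.0, 80.0]]
```

Doubling the exposure doubles every pixel's charge, and the pixel receiving twice as much light as another holds twice the charge, which is why the stored charge can stand in for brightness.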

The charge in every pixel must then be measured, one pixel at a time and in the correct order. Under computer control, the camera's electronics first read out the bottom row of pixels one at a time, manipulating the voltages between pixels to move each charge along the row to be measured. Then every remaining row shifts down by one, so the next row takes the bottom row's place and is read out in turn. This process repeats until every pixel has been measured. The result is an array of numbers, each one a measure of the brightness in a single pixel.
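Here is a simplified Python model of that readout order. The function name `read_out` and the list-of-rows layout (top row first) are illustrative assumptions; real CCD electronics physically move charge rather than copying lists, but the order in which values leave the chip follows the same bottom-row-first, pixel-by-pixel pattern.

```python
def read_out(chip):
    """Simplified CCD readout: bottom row first, one pixel at a time.

    chip: list of rows of charges; chip[0] is the top row, chip[-1] the bottom.
    Returns (values in readout order, image rebuilt from those values).
    """
    rows = [list(row) for row in chip]  # copy, so the caller's data is untouched
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    readout = []
    for _ in range(n_rows):
        # Measure the bottom row pixel by pixel.
        for charge in rows[-1]:
            readout.append(charge)
        # Shift every remaining row down by one; an empty row enters at the top.
        rows = [[0] * n_cols] + rows[:-1]

    # The first n_cols values came from the bottom row, the next n_cols from
    # the row above it, and so on, so rebuild the image from the bottom up.
    image = [readout[i * n_cols:(i + 1) * n_cols] for i in range(n_rows)]
    image.reverse()
    return readout, image


chip = [[1, 2, 3],
        [4, 5, 6]]
order, image = read_out(chip)
print(order)          # [4, 5, 6, 1, 2, 3]
print(image == chip)  # True
```

The printed order shows the bottom row leaving the chip first; because the program knows that order, it can put every number back in its correct place in the final array.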

Software can transform this array of numbers into a black-and-white image, but how do we get color? For most astronomical pictures, we take multiple exposures of an object through a series of different colored filters placed in front of the camera (for example, red, green, and blue). The red-filter image is read out, then the green-filter one, and so forth. We then assign a color to each image and combine them. Because those choices are up to whoever processes the data, the colors in an astronomical image can vary dramatically depending on how it was processed.
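The combining step can also be sketched in Python. Everything here is an illustrative assumption: the function `combine_rgb`, the mapping of the red-, green-, and blue-filter images to the red, green, and blue display channels, and the per-channel `weights`, which stand in for the choices a person makes while processing. Changing only the weights changes the final color.

```python
def combine_rgb(red, green, blue, weights=(1.0, 1.0, 1.0)):
    """Combine three filtered black-and-white exposures into one color image.

    red, green, blue: 2D lists of pixel values from each filtered exposure
    weights: hypothetical per-channel scale factors chosen during processing
    Returns a 2D list of (R, G, B) tuples scaled to the 0-255 range.
    """
    channels = (red, green, blue)
    # The brightest weighted value in any channel maps to 255.
    peak = max(max(max(row) for row in ch) * w for ch, w in zip(channels, weights))
    if peak == 0:
        peak = 1  # all-dark frames: avoid dividing by zero
    image = []
    for r_row, g_row, b_row in zip(red, green, blue):
        image.append([
            tuple(round(255 * v * w / peak) for v, w in zip((r, g, b), weights))
            for r, g, b in zip(r_row, g_row, b_row)
        ])
    return image


# One-pixel exposures through each filter (made-up values).
red, green, blue = [[100]], [[40]], [[20]]
print(combine_rgb(red, green, blue))                   # [[(255, 102, 51)]]
print(combine_rgb(red, green, blue, (1.0, 2.0, 1.0)))  # [[(255, 204, 51)]]
```

The same three exposures turn from orange to a yellower shade once the green weight is doubled; the data did not change, only the processing choice did.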

National Optical Astronomy Observatory, Tucson, Arizona