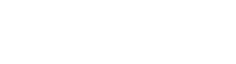

The details aren’t visible in the individual images, but they are present. You can’t see them because they are hiding in the noise. Combining images reduces noise, and the details become visible.

This sounds like magic, but it’s really just mathematics at work. Noise isn’t a “thing” in the usual sense. It is a measure of uncertainty in the data. In each image, there is a large uncertainty in the brightness value of each pixel. Some pixels are brighter than they should be, and some are fainter. The size of these variations, usually expressed as their standard deviation, is a measure of the noise present in an image.
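To make “noise as a spread of pixel values” concrete, here is a minimal simulation. It assumes Gaussian per-pixel noise (a simplification of real camera noise) and uses hypothetical values for the true brightness, noise level, and frame count. It measures noise as the standard deviation of a patch that should be perfectly uniform, then averages several such frames to show the spread shrinking by roughly the square root of the number of frames. Averaging and summing frames give the same signal-to-noise ratio; averaging just keeps the numbers on the original scale.

```python
import random
import statistics

def noisy_frame(true_value, sigma, n_pixels, rng):
    """Simulate a uniform patch: every pixel 'should' read true_value,
    but Gaussian noise with standard deviation sigma scatters it."""
    return [rng.gauss(true_value, sigma) for _ in range(n_pixels)]

def stack_mean(frames):
    """Average the frames pixel by pixel (a simple mean stack)."""
    return [statistics.fmean(pixels) for pixels in zip(*frames)]

rng = random.Random(42)   # fixed seed so the run is repeatable
TRUE_VALUE = 100.0        # hypothetical true brightness (arbitrary units)
SIGMA = 10.0              # hypothetical per-frame noise level
N_PIXELS = 10_000
N_FRAMES = 16

single = noisy_frame(TRUE_VALUE, SIGMA, N_PIXELS, rng)
frames = [noisy_frame(TRUE_VALUE, SIGMA, N_PIXELS, rng) for _ in range(N_FRAMES)]
stacked = stack_mean(frames)

# Noise = spread of pixel values around the true level.
single_noise = statistics.stdev(single)
stacked_noise = statistics.stdev(stacked)

print(f"single-frame noise: {single_noise:.2f}")              # roughly 10
print(f"{N_FRAMES}-frame stack noise: {stacked_noise:.2f}")   # roughly 10 / sqrt(16) = 2.5
```

A brightness difference of, say, 5 units would be buried in the single frame’s scatter of about 10 but stands well clear of the stack’s scatter of about 2.5.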

Most of the noise comes from two sources: read noise and shot noise. Read noise is added each time an image is read out of the camera, no matter how long the exposure was. Shot noise accumulates over time just like the image signal does, but the shot noise is equal to the square root of the signal (when the signal is counted in detected photons, or electrons). So if you double the exposure time, the signal increases by a factor of 2, but the noise increases only by the square root of 2, or about 1.41. When shot noise dominates, the signal-to-noise ratio therefore also improves by about 1.41. With longer exposures, signal grows faster than noise, the relative uncertainty in each pixel’s brightness goes down, and details become more visible. In practical terms, any brightness difference that is smaller than the noise level will be lost to view. Lowering the noise level relative to the signal with a long exposure time effectively brings out these brightness differences.
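The square-root rule can be checked numerically. This is a minimal sketch, assuming the signal is counted in electrons, arrives at a constant rate, and that read noise is added once per readout. `snr_single` is a hypothetical helper, the rate and read-noise values are made up for illustration, and combining the two noise sources in quadrature (they are independent) is standard practice rather than something stated in the passage.

```python
import math

def snr_single(rate, exposure_s, read_noise):
    """Signal-to-noise ratio of one exposure.

    rate       -- hypothetical signal rate, electrons per second
    exposure_s -- exposure time, seconds
    read_noise -- read noise, electrons RMS, added once per readout
    """
    signal = rate * exposure_s
    shot_noise = math.sqrt(signal)  # shot noise = square root of the signal
    # Independent noise sources add in quadrature (their variances add).
    total_noise = math.sqrt(shot_noise**2 + read_noise**2)
    return signal / total_noise

rate = 50.0        # electrons per second (hypothetical)
read_noise = 10.0  # electrons RMS (hypothetical)

for t in (60, 120, 240):
    print(f"{t:>4} s: signal = {rate * t:>6.0f} e-, "
          f"SNR = {snr_single(rate, t, read_noise):.1f}")
```

Each doubling of exposure time raises the SNR by slightly more than the square root of 2 here, because the fixed read noise becomes a smaller share of the total; with `read_noise = 0` the gain is exactly the square root of 2.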

You can get a long total exposure time either with a single long exposure or by adding multiple short exposures together. A single long exposure is read out only once, so it carries the read noise only once. Stacking (combining) “n” exposures means “n” readouts; because independent noise sources add in quadrature, the read-noise variance grows n-fold, and the total read noise grows by the square root of n. So stacking is a little noisier than a single exposure of the same total length, but it is much more convenient, especially if you are gathering data on multiple nights. Short exposures are also less risky, because something like a passing satellite will ruin only one of the short exposures.

Ron Wodaski, *The New CCD Astronomy*, www.newastro.com/ipb
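The read-noise cost of stacking can be sketched the same way. This assumes a constant, hypothetical signal rate in electrons per second, equal-length sub-exposures that are summed, and one read-noise contribution per sub-exposure; it ignores dark current and sky background for simplicity. `snr_stack` is a hypothetical helper name, and the numbers are illustrative only.

```python
import math

def snr_stack(rate, total_s, n_frames, read_noise):
    """SNR of n_frames equal sub-exposures summed to total_s seconds.

    Shot noise depends only on the total signal, but each of the
    n_frames readouts contributes its own read noise, so the
    read-noise variance is n_frames * read_noise**2.
    """
    signal = rate * total_s
    variance = signal + n_frames * read_noise**2  # shot variance = signal
    return signal / math.sqrt(variance)

rate = 50.0        # electrons per second (hypothetical)
read_noise = 10.0  # electrons RMS per readout (hypothetical)
total_s = 3600     # one hour of total exposure

for n in (1, 4, 12, 60):
    print(f"{n:>3} x {total_s // n:>4} s: "
          f"SNR = {snr_stack(rate, total_s, n, read_noise):.1f}")
```

With these numbers, splitting the hour into 60 one-minute frames costs less than 2 percent of the SNR, because the shot noise from 180,000 electrons swamps the added read noise. With fainter targets or very short sub-exposures, read noise takes a larger share and the penalty grows.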