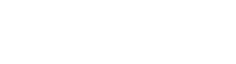

To localize itself after a drive, Curiosity takes a series of stereo images of its surroundings with its navigation cameras (NAVCAM) when it finishes moving for the day. The rover team stitches the overlapping images into a mosaic and projects it onto the ground. We then compare this ground-projected image, called an orthophoto, with the base map: we look for the same rocks and ridges in both images and shift the rover's center until those features line up. The science team then locates every other feature of interest, such as rocks or outcrops, relative to this fixed position. We can calculate these features' positions to the accuracy of the NAVCAM images, which can reach millimeter precision within a few meters of the rover.
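The alignment step above can be pictured as a small least-squares problem. The sketch below is a hypothetical illustration, not the team's software (the article describes people matching features by eye). It assumes the features have already been paired up, that the orthophoto and base map share a flat 2D map frame in meters, and that only a translation is needed, with no rotation. Under those assumptions, the offset that best overlays the orthophoto features on the base map is simply the mean of their coordinate differences. The names `estimate_offset`, `correct_position`, and `locate_feature` are illustrative.

```python
from statistics import fmean

# Hypothetical 2D map coordinates in meters: (easting, northing).
Point = tuple[float, float]


def estimate_offset(ortho_pts: list[Point], map_pts: list[Point]) -> Point:
    """Return the translation that best moves orthophoto features onto base-map features.

    For a pure translation t minimizing sum ||(o_i + t) - m_i||^2, the
    least-squares optimum is the mean of the differences m_i - o_i.
    """
    if not ortho_pts or len(ortho_pts) != len(map_pts):
        raise ValueError("need equal-length, non-empty lists of matched features")
    dx = fmean(m[0] - o[0] for o, m in zip(ortho_pts, map_pts))
    dy = fmean(m[1] - o[1] for o, m in zip(ortho_pts, map_pts))
    return (dx, dy)


def correct_position(rover: Point, offset: Point) -> Point:
    """Shift the rover's estimated center by the alignment offset."""
    return (rover[0] + offset[0], rover[1] + offset[1])


def locate_feature(rover: Point, relative: Point) -> Point:
    """Place a science target given its offset from the corrected rover center."""
    return (rover[0] + relative[0], rover[1] + relative[1])


# Usage: orthophoto features were placed using the rover's onboard position estimate.
estimated_rover = (100.0, 200.0)
ortho = [(102.0, 203.0), (98.5, 206.0), (105.0, 199.0)]     # rocks as seen in the orthophoto
base_map = [(102.5, 202.7), (99.0, 205.7), (105.5, 198.7)]  # the same rocks on the base map

offset = estimate_offset(ortho, base_map)          # approximately (0.5, -0.3)
rover = correct_position(estimated_rover, offset)  # approximately (100.5, 199.7)
target = locate_feature(rover, (3.0, 1.5))         # approximately (103.5, 201.2)
```

Averaging over several matched features keeps any single misidentified rock from dominating the correction; a real alignment may also need to solve for rotation, which this sketch deliberately leaves out.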

Curiosity also carries an inertial measurement unit (IMU) that helps track the rover's position, providing both distance traveled and orientation (roll, pitch, and yaw), just like an airplane. However, we use “ground in the loop,” i.e., humans, to verify and correct errors in the drive position after long drives or when the wheels slip significantly in sand or skid on steep slopes. Although the rover carries many sophisticated instruments, visual triangulation serves us well to keep it on the “straight and narrow” as we head toward our science destinations.
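Slip matters because an onboard position estimate is built up one step at a time. The simplified dead-reckoning sketch below is an assumption-laden illustration, not Curiosity's flight software: it works in 2D, ignores roll and pitch, treats each drive segment as a straight line with a measured distance and yaw (heading counterclockwise from east), and models slip as a fixed fraction of each segment that the wheels turned through but the rover did not actually cover. The function names `dead_reckon` and `slip_error` are hypothetical.

```python
import math

Point = tuple[float, float]


def dead_reckon(start: Point, segments: list[tuple[float, float]]) -> Point:
    """Integrate straight drive segments (distance_m, yaw_rad) into a 2D position.

    In this sketch, yaw is measured counterclockwise from the +x (east) axis.
    """
    x, y = start
    for distance, yaw in segments:
        x += distance * math.cos(yaw)
        y += distance * math.sin(yaw)
    return (x, y)


def slip_error(start: Point, segments: list[tuple[float, float]], slip: float) -> float:
    """Distance between the onboard estimate and where the rover actually ends up.

    The estimate trusts the measured distances; the true path covers only
    (1 - slip) of each segment, a crude model of wheels spinning in sand.
    """
    estimated = dead_reckon(start, segments)
    actual = dead_reckon(start, [(d * (1.0 - slip), yaw) for d, yaw in segments])
    return math.dist(estimated, actual)


# Drive 10 m east, then 10 m north, with 20% slip on every segment.
drive = [(10.0, 0.0), (10.0, math.pi / 2)]
estimate = dead_reckon((0.0, 0.0), drive)   # approximately (10.0, 10.0)
error = slip_error((0.0, 0.0), drive, 0.2)  # approximately 2.83 m, i.e. sqrt(8)
```

Because the error in this model grows with every meter driven, a long drive through sand can leave the onboard estimate well off the true position, which is why a visual check against the base map is valuable after such drives.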

NASA Jet Propulsion Laboratory

Pasadena, California