Modern astronomical observatories send us an enormous amount of data, which is generally examined and shared in the form of images. But what if the myriad forms of information we receive from space could be converted into sounds that inspire, entertain, educate, and enlighten? And what if, more than that, these soundscapes could offer access to the visually impaired and aid in new cosmic discoveries?

Welcome to the field of astronomical sonification.

Sonification simply refers to the conversion of data into audible sound. Initially, I wondered if I would be able to find enough content to write an article on the topic. Instead, I found volumes on how this field is changing the landscape of human understanding and discovered that the auditory dimension of data offers a powerful perspective, one that enhances our ability to perceive, discriminate, and respond to complex information in parallel with the field of data visualization.

The sonification field has grown quickly. There are now conferences, online communities, podcasts, documentaries, live musical performances, commercial recordings, and community engagement opportunities in the U.S. and internationally. When I reached out to David Worrall, a prolific writer on the topic and past president of the International Community for Auditory Display (formed in 1992 with a “singular focus on auditory displays and the array of perception, technology, and application areas that this encompasses”), he warmly welcomed me to “their little corner of the world.”

Little did I realize how big that little corner really is.

Turning data into sound

The roots of sonification (a term that emerged in the early 1990s) are widely traced to the 1908 invention of the Geiger counter, which renders data (in this case, ionizing radiation) as audible clicks. By the 1930s, electroencephalogram (EEG) sonification was used to complement visual analysis of brainwaves.

Sonification has come a long way since then. But its crossover into astronomy accelerated with advances in computing, when early pioneers such as composer and engineer Iannis Xenakis experimented in the 1960s with converting data into sound through algorithmic composition. Today, the appetite to push this discipline forward is palpable, and conversations often reference The Sonification Handbook (Logos Verlag, 2011), which explores its theory, methods, and applications.

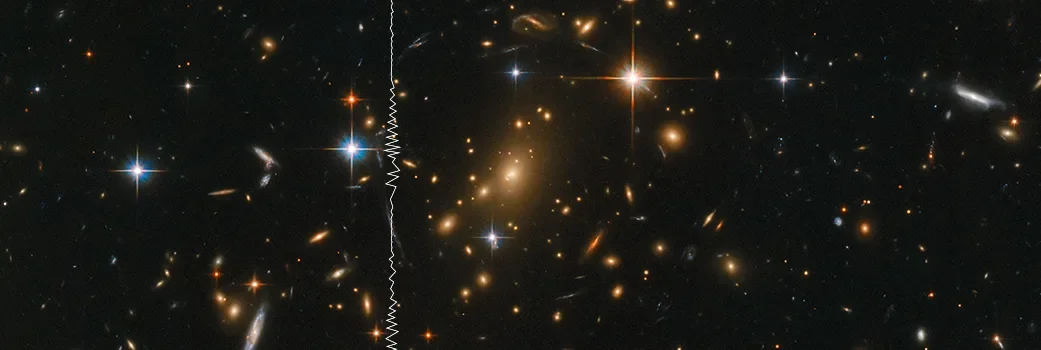

A leader in this field is Kim Arcand, a visualization scientist and emerging technology lead for NASA’s Chandra X-ray Observatory. In 2020, experts at the Chandra X-ray Center began the first ongoing, sustained program at NASA to sonify astronomical data, supported by NASA’s Universe of Learning, operated by the Smithsonian Astrophysical Observatory, and led by Arcand. She explains that “in the case of Chandra and other telescopes, scientific data is collected from space as digital signals and turned into visual imagery. The sonification project takes this data through another step of mapping the information into sound.”

The excitement Arcand exhibits about this topic is undeniable as she mentions how astounded she is by the number of fields that are using sonification as a tool for scientific analysis and scientific communication. She cites geologists, volcanologists, oceanographers, and medical researchers as examples with a consistent theme: that by listening to sonified data, researchers can identify patterns, trends, and anomalies that might not be discernible through visual analysis alone.

Another passionate advocate of sonification is Wanda Diaz Merced, an internationally recognized expert in the field whose work has taken her around the world. In a speech about sonification at the United Nations, Merced succinctly said, “It’s about [using] sound to perform a more rigorous exploration of the information.”

Merced is currently an astrophysicist, computer scientist, and professor at the Universidad del Sagrado Corazón in Puerto Rico. Merced lost her sight as a young adult but discovered that she could use her ears to detect patterns in stellar radio data that could potentially be obscured in visual and graphical representations. “I use audio to augment the perception of signatures in the data or changes in the information that by nature are blind to the human eye,” she says.

When asked what her favorite sonification is, Merced quickly replies, “the human voice.” Indeed, if we consider “sonification” to be the general translation of any form of information into sound, the human voice does exactly that, communicating information through pitch, tone, rhythm, and volume.

Composing the data

The emergence of new technology and computing tools used for musical compositions has created palettes of sounds with more depth, color, and choices than ever before. However, choosing the correct sounds is not as random as simply listening to a sonification might imply.

As part of Arcand’s team, astrophysicist and musician Matt Russo and musician and sound engineer Andrew Santaguida have created numerous sonifications of astronomical images from data derived from the Chandra X-ray Observatory and the Hubble and James Webb space telescopes.

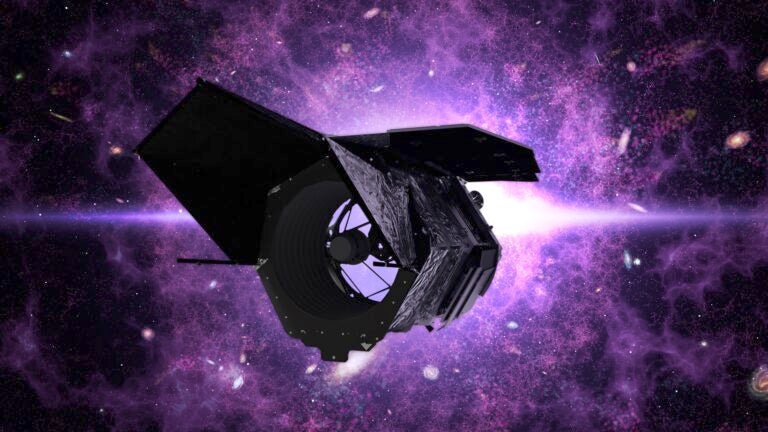

My interview with Russo went deep into the astoundingly complex process as he emphasized how the data drives the direction the soundtrack takes. While some of the initial data comes to him in raw form from multiple space organizations and publicly available information, celestial images that have already been created serve as the basis for most of his sonifications. The next step is to digitally separate an image into different layers so parameters such as brightness, color, and temperature can be mapped to audible properties such as pitch, volume, timbre, and duration. Then, a direction must be chosen: sonifications can move from left to right, top to bottom, from the inside out, or the reverse of any of these. “The image determines the most effective approach to achieve the best outcome in its contextualization,” Russo says.
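To make the idea of mapping concrete, here is a minimal Python sketch of a left-to-right scan. It is only an illustration under stated assumptions, not the pipeline Russo's team uses: the function name `sonify_scan`, the 220 to 880 Hz pitch range, and the choice to map brightness to volume and vertical position to pitch are all hypothetical.

```python
def sonify_scan(image, low_hz=220.0, high_hz=880.0, reverse=False):
    """Scan an image column by column and return one (frequency_hz, volume) note per column.

    image: list of rows (row 0 is the top), each a list of brightness values in [0, 1].
    reverse: False scans left to right, True scans right to left.
    """
    n_rows = len(image)
    n_cols = len(image[0])
    columns = range(n_cols - 1, -1, -1) if reverse else range(n_cols)
    notes = []
    for col in columns:
        column = [image[row][col] for row in range(n_rows)]
        peak = max(column)
        peak_row = column.index(peak)
        # Assumed mapping 1: brightness -> volume (0 is silent, 1 is full volume).
        volume = peak
        # Assumed mapping 2: vertical position -> pitch (the top row is the highest note).
        height = 1.0 - peak_row / (n_rows - 1) if n_rows > 1 else 0.0
        # Interpolate geometrically so equal steps in height are equal musical intervals.
        freq_hz = low_hz * (high_hz / low_hz) ** height
        notes.append((freq_hz, volume))
    return notes


# A tiny 3x3 "image" with a bright feature rising from bottom left to top right.
image = [
    [0.0, 0.2, 1.0],
    [0.0, 0.9, 0.1],
    [0.5, 0.1, 0.0],
]

for freq_hz, volume in sonify_scan(image):
    print(f"{freq_hz:6.1f} Hz at volume {volume:.1f}")
```

Passing `reverse=True` plays the same notes in the opposite order. A top-to-bottom or inside-out scan would need a different loop over rows or radii, and a real sonification would go on to synthesize audio from these notes rather than print them.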

Context clues

When listening to a sonification, you might think that you should be able to close your eyes and envision the image as the audio unfolds. And while the sounds will most certainly create a mental image, context and a defined “legend” are vital. Even after an image of a nebula is described to a blind or low-vision person, for example, the question remains as to what the different sounds represent. Is starlight intensity represented by tone or volume? What musical textures are being used to suggest what the nebula looks like? What do whooshing effects or percussive sounds indicate? And, importantly, in what direction is the sonification unfolding?

For some in the field, this information is conveyed before listening to a sonification. Merced, however, says she prefers not to “allow anyone to describe the image or the sonification to me, with the exception of possibly the name of the image,” before she listens. “It’s my one-on-one time with data and I don’t want to be biased. To me, it’s like I’m meeting someone for the first time, and that first communication is very special.”

Merced also mentions that there are scientific benefits to sonification training with simulated data, “with listeners searching for hidden events placed at difficult spots or where attention may be compromised.”

She also encourages “the use of perceptual experiments of hiding signatures in different parts of the data and then testing which timbres create the greatest perception.”
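One way to picture such an experiment is the short Python sketch below, which hides a brief event at a random spot in simulated noise and checks whether a listener's answer lands on it. It is a hypothetical illustration, not Merced's protocol: the names `make_trial` and `score_response`, the Gaussian noise, and the event width, height, and tolerance are all assumptions.

```python
import random


def make_trial(n_samples=200, event_width=5, event_height=3.0, seed=None):
    """Return (data, start): simulated noise with one hidden event beginning at index start."""
    rng = random.Random(seed)
    # Background: unit-variance Gaussian noise (an assumed noise model).
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    # Hide the event at a random position that fits inside the series.
    start = rng.randrange(0, n_samples - event_width + 1)
    for i in range(start, start + event_width):
        data[i] += event_height
    return data, start


def score_response(guess, start, event_width=5, tolerance=2):
    """True if the listener's guessed index falls on the event, give or take the tolerance."""
    return start - tolerance <= guess < start + event_width + tolerance


data, start = make_trial(seed=1)
print("event hidden at sample", start)
print("a guess on the event counts as a hit:", score_response(start, start))
```

In an actual study, each trial's data would be sonified with a different timbre before the listener responds, and hit rates would be compared across timbres.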

Russo explains that “short and understandable sonifications reduce the cognitive load … if it’s too complicated, the information trying to be communicated may be lost. Everything can’t be represented, so you have to isolate the key elements.” He notes that he has to take into consideration that the “human auditory system responds to different types of sounds and different frequency ranges. Our ears are much more sensitive to changes in frequency than to changes in volume.” He adds that “considering how long a pattern remains in someone’s musical memory is also a factor.”

Merced emphasizes that the listener’s aural sensitivities need to be taken into consideration, based on a person’s ability to discern groups of timbres. She adds that “if you can listen to different groups of timbres playing simultaneously, then a complex sonification will be more effective. Otherwise, you should listen to single-instrument sonifications.” Her studies have found that using inharmonic instruments (those whose overtones are not harmonic and can sound dissonant), such as percussion, increases sensitivity to events in the data. And Arcand adds that both the speed at which the sonification unfolds and keeping sounds in comfortable ranges are critical.

An extension of the team led by Arcand includes Christine Malec, a blind astronomer, musician, and consultant for NASA. Malec’s first reaction to hearing Russo and Santaguida’s sonification of the TRAPPIST-1 exoplanet system was “goosebumps,” she says, as she experienced a visceral encounter. Malec says that while she appreciates complex sonifications, her preference is for ones that are “simpler and cleaner because they’re easier to parse.”

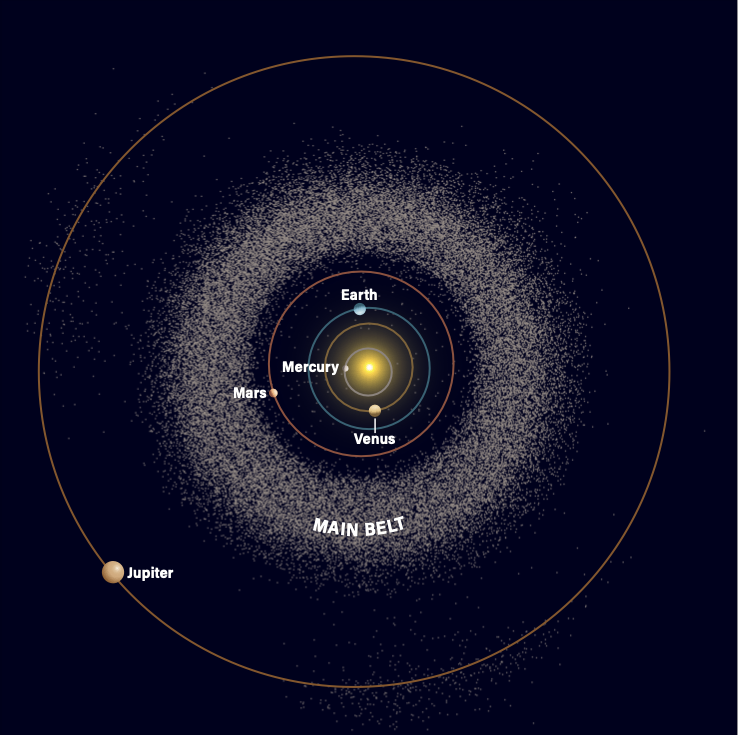

Unlike some 90 percent of the sonifications available for listening, which require an interpretation of the image to create the soundscape, the TRAPPIST-1 sonification uses actual orbital frequencies, scaled into the human hearing range, to produce a truly representative sonification. This process was also used for the sonification of the Perseus Galaxy Cluster black hole, which generates pressure waves equivalent to a note 57 octaves below middle C. Its sonification boosts these waves into our hearing range of 20–20,000 hertz. “One of the best [sonification] examples for me is the Chandra Deep Field South,” Arcand says. “It is so unexciting looking, but scientifically, I love it. It’s the deepest X-ray image ever taken and represents black holes and galaxy systems reaching back early in time.”
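The octave arithmetic behind the Perseus example is simple to sketch: raising a tone by one octave doubles its frequency, so 57 octaves corresponds to a factor of 2^57, or roughly 1.44 × 10^17. The Python below is an illustration only. The helper names are hypothetical, and the value used for middle C (about 261.63 Hz) assumes standard tuning with A4 at 440 Hz.

```python
import math

MIDDLE_C_HZ = 261.63  # approximate; assumes equal temperament with A4 = 440 Hz


def shift_octaves(freq_hz, octaves):
    """Raise (or, for negative values, lower) a frequency by whole octaves."""
    return freq_hz * 2.0 ** octaves


def octaves_to_reach(freq_hz, target_hz=20.0):
    """Smallest whole number of octaves that lifts freq_hz to at least target_hz."""
    return max(0, math.ceil(math.log2(target_hz / freq_hz)))


# A tone 57 octaves below middle C, as in the Perseus example.
source_hz = shift_octaves(MIDDLE_C_HZ, -57)
needed = octaves_to_reach(source_hz)
print(f"source: {source_hz:.2e} Hz")
print(f"octaves needed to reach 20 Hz: {needed}")
print(f"after shifting: {shift_octaves(source_hz, needed):.1f} Hz")
```

The same helpers would apply to an orbit: a planet's orbital frequency is one divided by its period in seconds, and shifting every planet in a system by the same number of octaves preserves the ratios between them.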

A richer experience

The benefits of sonifications are significant for those who are blind or have low vision. Linking the sense of hearing with tactile, 3D-printed models of celestial bodies further allows them to simultaneously “feel” the shapes and structures of the sonified image.

But the allure of sonification reaches beyond simple necessity. The public’s appetite for the addition of sound to extraordinary space images has resulted in millions of online views, which is not lost on space agencies’ public relations departments. Sonifications not only raise awareness about astronomical research, analysis, and communication, but also encourage a deeper appreciation for the cosmos. My inquiry to the European Space Agency’s headquarters in Paris, France, as to whether they were using sonifications as a tool for data analysis and public outreach was met with a resounding “yes,” along with links to their work in this field and an invitation to speak with the relevant scientists.

And what about the use of AI in the field of sonification? My questions about this topic garnered a unified response: that while sonification can benefit from the use of AI to increase the speed of data processing, choosing the desired mapping for the right sonic result will remain firmly in human hands. Russo explains the latter process as “needing to find the intuitive match for the data.” He cites his 30 Doradus (the Tarantula Nebula) sonification, which includes transient sounds with less defined tonality because of the ambiguity of the image itself.

Merced sees another, deeper use for AI in sonification: as a complementary tool to look for unseen astronomical signatures, based on sound parameters within new audio analyses.

Asked about the financial support needed to sustain advances in the sonification field, Arcand says she hopes that the groundwork that has been laid for this type of data expression will prove to be worthy of ongoing commitments by NASA and others. Russo echoes this with his hope that the future will continue to validate the contributions made by this field, and suggests that an undeniable discovery made by sonification will be a catapult for elevated public awareness and support.

As technology advances and interdisciplinary collaborations grow, the future of sonification in astronomy promises exciting developments that will further illuminate the mysteries of the cosmos through sound.