On an ordinary day about 2.6 million years ago, a new light appears in the sky. Our ancestors, who spend at least some of their time in trees, probably notice it but gradually lose interest as the novelty wears off. It’s pointlike and brighter than the Full Moon, but it quickly fades from view during the daytime. It remains bright enough, however, to light up the night for several weeks or months, denying our ancestors a good night’s sleep.

Nothing much else happens until about a hundred years later, when anyone who could reason and keep records would notice that lightning has become far more frequent.

The lightning ignites fires. In the Great Rift Valley in East Africa, where humans’ ancestors are living, forests are converted to grassland, forcing inhabitants to walk from tree to tree. There may be long pauses between periods of increased lightning activity, so the area transitions from grassland to scrub and back again, challenging these early humans to adapt.

I’ve written about the generalized threat from astrophysical radiation events. Nearly all the work in this field has been a “what if” game. Scientists know there must have been radiation events, just based on the odds. From astronomical observations, we can infer the average rate of supernovae, gamma-ray bursts, and solar outbursts. From this rate, we can infer the likelihood of such an event close enough and powerful enough to affect life on Earth. We expect serious, life-threatening events every couple of hundred million years or so, on average.
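The step from an average rate to a likelihood can be sketched as a Poisson calculation. This is a minimal sketch that assumes events strike randomly and independently in time. The 200-million-year interval is the rough figure quoted above, and the 500-million-year window is a hypothetical span chosen only for illustration.

```python
import math

def prob_at_least_one(mean_interval_myr: float, span_myr: float) -> float:
    """Poisson probability of at least one event within span_myr,
    given a mean recurrence interval of mean_interval_myr (both in Myr)."""
    expected = span_myr / mean_interval_myr  # expected number of events
    return 1.0 - math.exp(-expected)

# Assumed inputs: ~200 Myr mean interval (from the text), 500 Myr window (hypothetical)
p = prob_at_least_one(200.0, 500.0)
print(f"P(at least one event) = {p:.2f}")
```

With these assumed inputs the expected number of events is 2.5, so at least one such event is very likely even though no single one is guaranteed.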

We know there have been mass extinctions and sudden changes in Earth’s climate. But because these events left few clues behind, for a time we could only speculate about any connection between events on Earth and those in space.

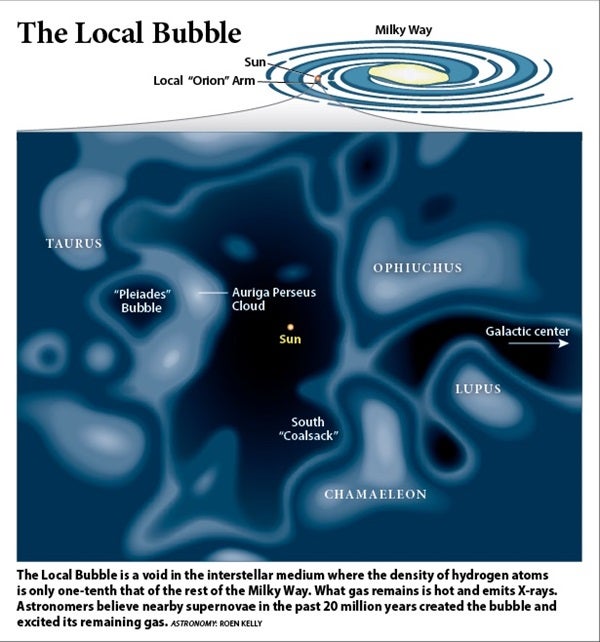

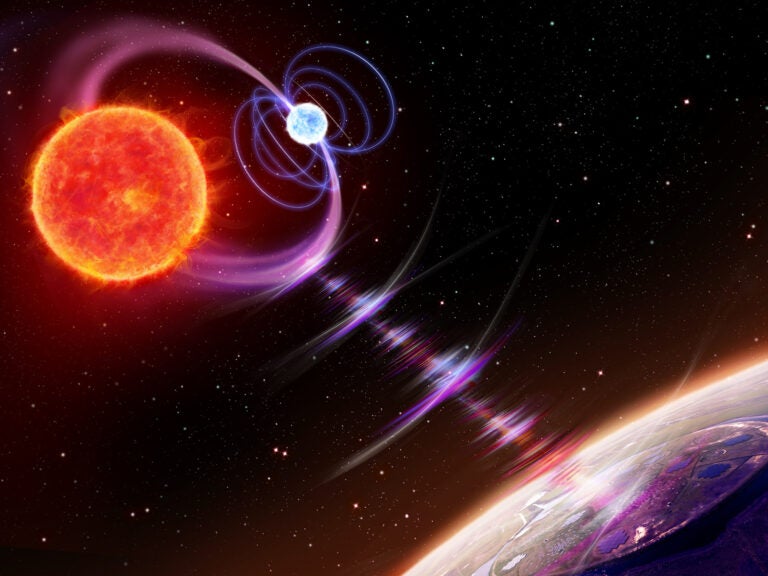

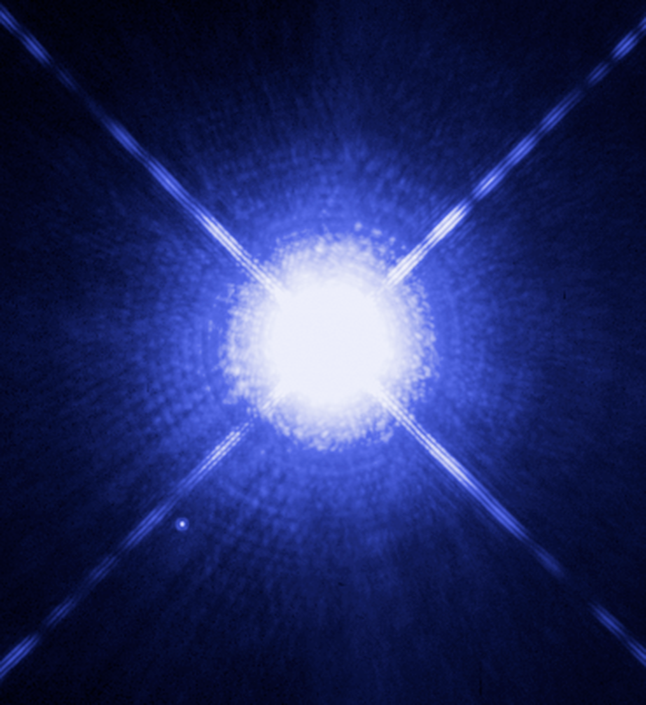

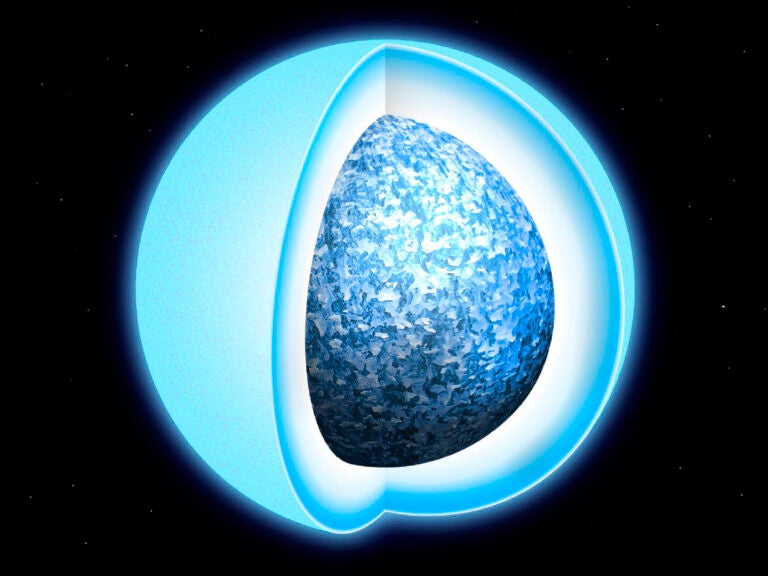

Supernovae, the explosions of stars, have been the main focus. A really nearby event — 30 light-years away or closer — would induce a mass extinction from radiation destroying the ozone layer, allowing lots of ultraviolet radiation through to damage life on the surface. It has probably happened a few times, based only on the rate of supernovae, but we don’t have any direct evidence. Somewhat more distant supernovae go off more often, but scientists assume the effects on Earth would be similar, though weaker.

In the past year, everything has changed. Researchers such as Brian D. Fields at the University of Illinois at Urbana-Champaign and his colleagues had already predicted that a supernova near enough to affect Earth would leave radioactive residue here that we might detect in ocean sediments. There had been some earlier claimed detections, but many scientists doubted them because the work is literally at the level of counting atoms! I wrote about more detections in Nature in 2016. Those data came from ocean-floor sediments at a variety of locations around the world. Other researchers followed with data from the fossilized remnants of bacteria, from the Moon, and even from cosmic rays, all finally creating a consistent picture.

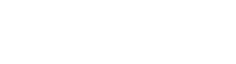

That picture is based on detection of iron-60, a radioactive isotope. The dominant, stable form of iron is iron-56, whose nucleus contains 26 protons and 30 neutrons. Iron-60 has four extra neutrons and decays with a half-life of 2.6 million years. Because our planet is 4.5 billion years old, no primordial iron-60 should be left on Earth; any we find must have come from space. Iron-60 is created in supernovae, so a sharp increase in the isotope at a given time is a good sign that a supernova went off not too far from Earth. On that, everyone agrees. Researchers can date the event from the age of the sediments in which the iron-60 is found. All recent publications agree that something happened 2.5 million to 2.6 million years ago at a distance between 150 and 300 light-years from Earth.
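The half-life argument is simple exponential decay, and a few lines of arithmetic show both sides of it: about half of the iron-60 deposited 2.5 million years ago survives today, while any iron-60 present when Earth formed has shrunk by a factor of roughly 10 to the 521st power. This is a minimal sketch; it works in base-10 logarithms for the Earth-age case because the raw fraction is far too small to store in a floating-point number.

```python
import math

HALF_LIFE_MYR = 2.6  # iron-60 half-life, millions of years

def fraction_remaining(elapsed_myr: float) -> float:
    """Fraction of an initial iron-60 sample left after elapsed_myr."""
    return 0.5 ** (elapsed_myr / HALF_LIFE_MYR)

def log10_fraction_remaining(elapsed_myr: float) -> float:
    """Base-10 logarithm of the surviving fraction (avoids float underflow)."""
    return -(elapsed_myr / HALF_LIFE_MYR) * math.log10(2.0)

print(f"after 2.5 Myr: {fraction_remaining(2.5):.2f} survives")
print(f"after 4,500 Myr: 10^{log10_fraction_remaining(4500.0):.0f} survives")
```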

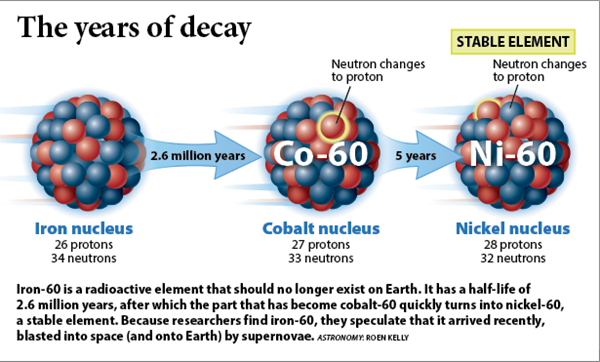

Beyond that, there is no consensus. There are indications of another event 7 million or 8 million years ago, also near enough to deposit iron-60 on Earth. In space, the iron would sweep past Earth as part of the blast wave and should be deposited for only a short time. Yet the deposits from each episode are spread out over time. One interpretation suggests that the dust grains containing iron-60 were caught up in interstellar clouds, which confined them or modified their trajectories, keeping them in our neighborhood so they could fall to Earth more than once. Another idea is that many supernovae, a dozen or more, went off at various distances. This would explain the extended deposits on Earth, and it also sounds reasonable considering the energy needed to blow out the Local Bubble, the low-density cavity in the interstellar medium that surrounds the Sun.

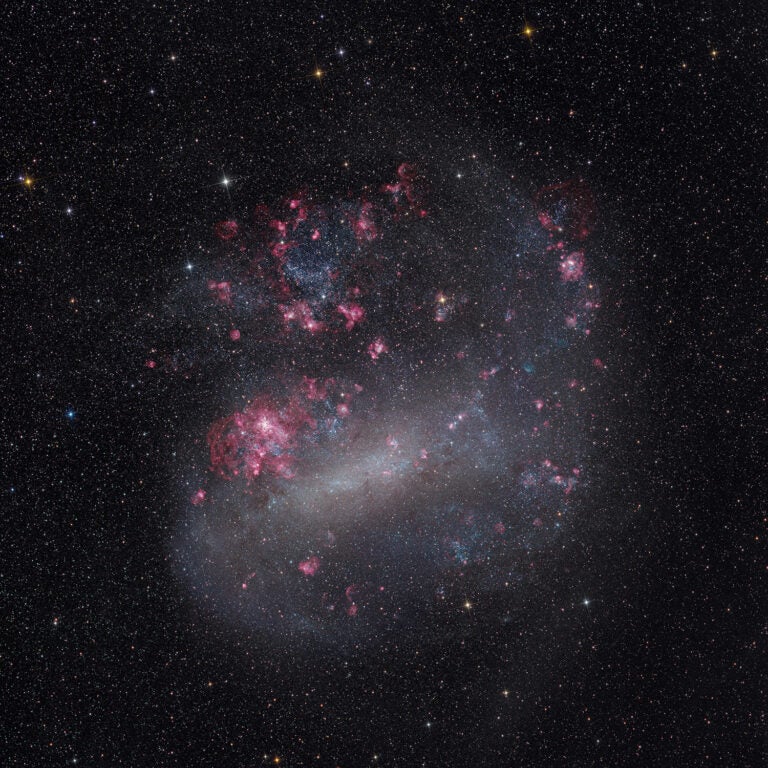

It may sound unreasonable to have so many supernovae all going off in the same area at nearly the same time, but it isn’t. The massive stars that make type II supernovae are often born in associations, and therefore clump together. The Orion Nebula (M42), a favorite of amateur astronomers, is a large example of this.

The stars that end as powerful supernovae have fairly short lives, so stars of similar starting mass that are born together in a group will tend to explode at roughly the same time. Astronomers estimate that the stars that dumped the iron-60 each contained about 10 times the mass of the Sun and should have lived only about 30 million years, a short span by stellar standards. This line of thinking shows that a chain of explosions is plausible, but by no means proven.
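The 30-million-year figure can be checked against a common textbook rule of thumb, in which a star’s main-sequence lifetime scales as roughly 10 billion years times its mass (in solar masses) to the power of -2.5. This is an assumption we bring in for illustration, not a stellar-evolution model; the scaling becomes inaccurate for the most massive stars.

```python
def main_sequence_lifetime_yr(mass_solar: float) -> float:
    """Rough main-sequence lifetime, t ~ 10 Gyr * (M / M_sun) ** -2.5.
    A rule-of-thumb approximation, not a detailed stellar model."""
    return 1.0e10 * mass_solar ** -2.5

for m in (1.0, 10.0, 20.0):
    print(f"{m:>4.0f} solar masses: {main_sequence_lifetime_yr(m):.1e} yr")
```

For 10 solar masses the rule gives about 3 × 10⁷ years, in line with the estimate above, and heavier stars burn out even sooner, which is why a group born together explodes within a fairly narrow window.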

For the first time, instead of general expectations, we have a definite event to discuss. It was not close enough to cause a mass extinction (that would take 30 light-years or less), but it was close enough to affect Earth. Compare this with the historical supernovae, thousands of light-years away, that left written records; the descriptions of their positions let us find their remnants in the sky. For the events that dumped iron-60, we have no such information. Although there is a lot of uncertainty about how many supernovae occurred in this series, the last one clearly happened about 2.5 million years ago, at a distance of 150 to 300 light-years. That gives us something to work with, and my group has been working out what kinds of effects we should expect.
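Why does a factor of five to ten in distance matter so much? For light and other prompt photon radiation, the energy reaching Earth falls off as the inverse square of distance. The sketch below applies only that geometric factor; it ignores absorption along the way, and it does not describe cosmic rays, which diffuse through magnetic fields rather than traveling in straight lines.

```python
def relative_fluence(distance_ly: float, reference_ly: float = 30.0) -> float:
    """Radiation fluence at distance_ly relative to that at reference_ly,
    using pure inverse-square geometry (no absorption, no diffusion)."""
    return (reference_ly / distance_ly) ** 2

for d in (30.0, 150.0, 300.0):
    print(f"{d:>5.0f} ly: {relative_fluence(d):.3f} of the 30-light-year fluence")
```

On this geometric basis alone, a supernova at 150 to 300 light-years delivers only 1/25 to 1/100 of the photon fluence of one at 30 light-years.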

Interestingly, Charles Sheffield wrote a pair of science fiction novels, Aftermath (1998) and Starfire (2000), that portray a nearby supernova with surprisingly accurate descriptions of many of the effects our group has calculated. And before mineral evidence pinned the dinosaur extinction on an asteroid or comet, researchers had also looked for evidence of a nearby supernova. So none of this is new; the issue has been considered at least since 1950. Still, our recent results surprised us, because the important effects turned out to be different from the ones usually discussed.

Hazard lights

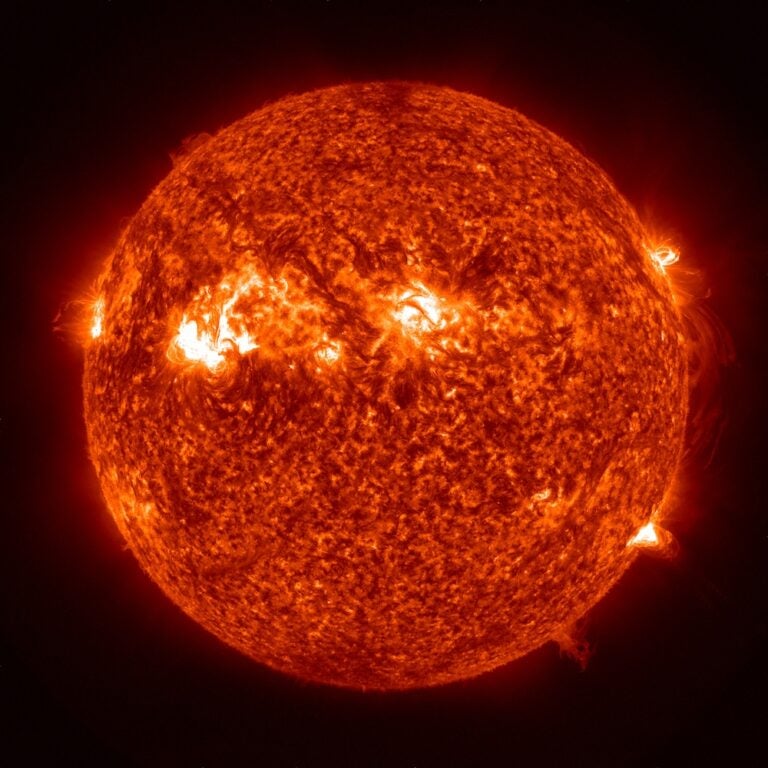

First, we looked at the effects of blue light generated by the supernova. It sounds silly, but insomnia would be a hazard if the event were visible on Earth’s night side. It turns out that the blue wavelengths of light are not at all healthy for sleeping creatures. (Get rid of any blue LED alarm clocks!) Both the intensity and color of such an object in the night sky would be detrimental to sleeping animals, but only for a few weeks at most.

A more commonly discussed hazard is ozone depletion in Earth’s atmosphere, resulting in a big increase in ultraviolet light at ground level. This is a side effect of radiation breaking up nitrogen gas (N₂) in our atmosphere. The bond between the two nitrogen atoms is so strong that life on Earth has generally lived with a nitrogen shortage. (Nitrogen can’t be used unless atomic nitrogen [N] is freed from the molecule.) Most radiation hazards break up the nitrogen in the stratosphere, after which the freed nitrogen combines with oxygen to form nitrogen oxides. These destroy ozone (O₃) by converting it to ordinary oxygen (O₂).
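The standard nitrogen-oxide catalytic cycle, well established in stratospheric chemistry though not spelled out in the text, shows why a modest amount of freed nitrogen can destroy a great deal of ozone: nitric oxide (NO) is regenerated at the end of each loop and can go around again.

```latex
\begin{aligned}
\mathrm{NO} + \mathrm{O_3} &\rightarrow \mathrm{NO_2} + \mathrm{O_2} \\
\mathrm{NO_2} + \mathrm{O} &\rightarrow \mathrm{NO} + \mathrm{O_2} \\
\text{net:}\quad \mathrm{O_3} + \mathrm{O} &\rightarrow 2\,\mathrm{O_2}
\end{aligned}
```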

Ozone in the stratosphere blocks the part of the ultraviolet spectrum called UVB, whose wavelengths are between about 280 and 315 nanometers. UVB can cause severe burning of the skin. It is absorbed by proteins and, most importantly, DNA; the localized burst of energy breaks chemical bonds and can lead to cancer and mutations. For many decades, the disaster scenarios for nearby supernovae have hinged on this effect. It turned out not to matter in this case. To explain why, we have to talk about cosmic rays.
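The "localized burst of energy" can be put in numbers with E = hc/λ. A UVB photon near 300 nm carries about 4 eV, comparable to the energy of many bonds in organic molecules (a typical carbon-carbon single bond is about 3.6 eV), while a visible photon carries roughly half as much. The two wavelengths below are illustrative choices.

```python
HC_EV_NM = 1239.84  # Planck constant times speed of light, in eV * nm

def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of a single photon of the given wavelength, E = hc / lambda."""
    return HC_EV_NM / wavelength_nm

for label, wl in (("UVB", 300.0), ("green light", 550.0)):
    print(f"{label} at {wl:.0f} nm: {photon_energy_ev(wl):.2f} eV")
```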

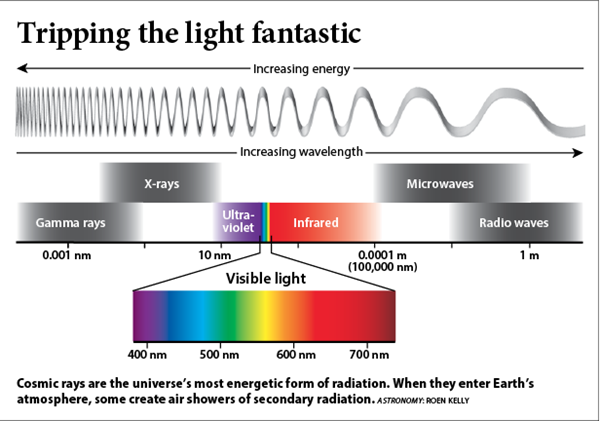

Cosmic rays are protons or atomic nuclei that have been accelerated to high energies. They differ both from cosmic neutrinos and gravitational waves, which have next to no effect on us, and from electromagnetic radiation (photons). Electromagnetic radiation includes radio waves, microwaves, visible light, X-rays, and gamma rays. We receive a lot of energy as sunlight: more than a kilowatt per square meter at the top of our atmosphere. Most of our understanding of the universe is built on electromagnetic radiation, but cosmic rays carry information too, and a large body of research focuses on them.

About 90 percent of cosmic rays are protons, the nucleus of the hydrogen atom. That makes sense because hydrogen is the most abundant element in the universe. The other 10 percent are the nuclei of heavier elements. Cosmic-ray energies are customarily measured in electron volts (eV), the energy an electron gains when it moves through an electric potential difference of 1 volt.

The cosmic ray spectrum we observe peaks at a few hundred million eV. That sounds like a lot, but because it’s less than the energy equivalent of a proton’s mass (about 938 million eV), these cosmic rays travel noticeably slower than the speed of light. Their energy is also low enough that Earth’s magnetic field can deflect them. Because low Earth orbit lies inside the field, most of our astronauts have considerable protection. Above this peak energy, the flux of cosmic rays decreases. The highest-energy ones carry an amazing 10²¹ eV, but on average they pass through a given square meter less than once a century. Good thing, too: Each carries the kinetic energy of a Major League Baseball pitch packed into one atomic nucleus!
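Both claims in that paragraph can be checked with a few lines of special relativity and unit conversion. In this sketch, 300 million eV stands in for a "typical" cosmic ray near the spectral peak, and the baseball is assumed to weigh 145 grams and travel at about 100 mph (44.7 m/s); these inputs are illustrative assumptions, not figures from the text.

```python
import math

PROTON_REST_MEV = 938.272   # proton rest energy, MeV
EV_TO_J = 1.602176634e-19   # joules per electron volt (exact SI value)

def speed_fraction_of_c(kinetic_mev: float, rest_mev: float = PROTON_REST_MEV) -> float:
    """v/c for a particle with the given kinetic energy (special relativity)."""
    gamma = 1.0 + kinetic_mev / rest_mev     # Lorentz factor
    return math.sqrt(1.0 - 1.0 / gamma**2)

# A cosmic-ray proton near the spectral peak (300 MeV is an assumed example)
print(f"300 MeV proton: v = {speed_fraction_of_c(300.0):.2f} c")

# Highest-energy cosmic ray versus a pitched baseball (assumed 145 g at 44.7 m/s)
cosmic_ray_j = 1e21 * EV_TO_J
baseball_j = 0.5 * 0.145 * 44.7**2
print(f"10^21 eV = {cosmic_ray_j:.0f} J; fastball = {baseball_j:.0f} J")
```

A proton near the peak moves at roughly two-thirds the speed of light, and a 10²¹ eV nucleus carries about as much energy as a fastball, so the comparison in the text holds up.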

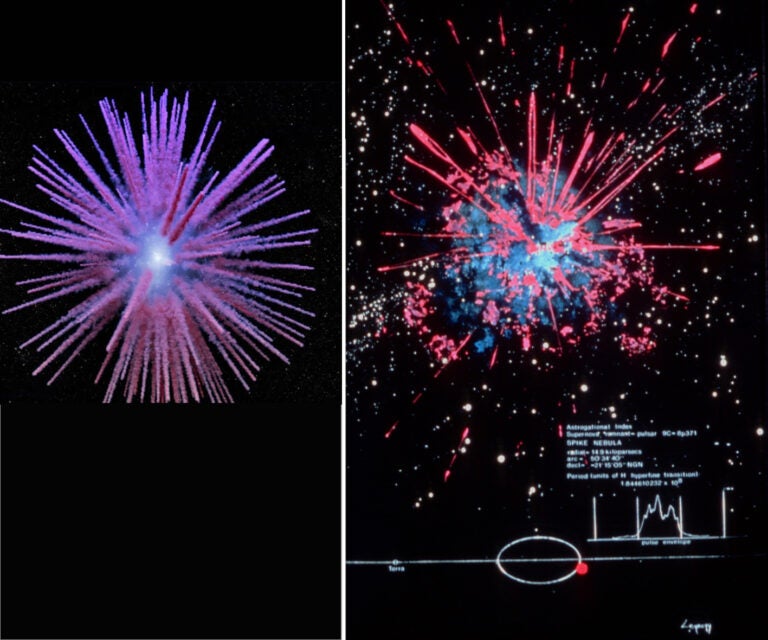

Multiple sources of cosmic rays exist, but evidence indicates that supernovae are a major source up to about 10¹⁵ eV. What we detect on Earth is a kind of average over the many cosmic ray sources. Because magnetic fields deflect charged particles, the Milky Way’s magnetic field scrambles most of their trajectories, so there’s no way to tell where they came from. However, the cosmic ray background will increase if there is a nearby event. Scientists are working out detailed calculations to decide how much the background would go up.
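How thoroughly the galactic field scrambles a trajectory can be gauged from the gyroradius, the radius of the circle a charged particle follows in a magnetic field, r = E/(ZeBc) for an ultrarelativistic nucleus. The sketch assumes a field of 3 microgauss, a typical interstellar value; the actual field near the Sun, inside the Local Bubble, may differ.

```python
C_M_PER_S = 2.998e8          # speed of light, m/s
M_PER_LIGHT_YEAR = 9.461e15  # meters in one light-year

def gyroradius_ly(energy_ev: float, field_gauss: float, charge_number: int = 1) -> float:
    """Larmor radius of an ultrarelativistic nucleus, r = E / (Z e B c),
    in light-years. Energy in eV, field strength in gauss."""
    field_tesla = field_gauss * 1e-4
    # E / (Z e) expressed in volts is simply energy_ev / charge_number
    radius_m = (energy_ev / charge_number) / (field_tesla * C_M_PER_S)
    return radius_m / M_PER_LIGHT_YEAR

# Assumed field: 3 microgauss
for e in (1e12, 1e15):
    print(f"{e:.0e} eV proton: r = {gyroradius_ly(e, 3e-6):.3g} light-years")
```

Even at 10¹⁵ eV the radius comes out near one light-year, far smaller than the 150 to 300 light-years to the supernova, so the particles wander rather than fly straight.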

Our atmosphere protects us from cosmic rays even more than Earth’s magnetic field. The magnetic field tends to deflect cosmic rays to the poles, but the atmosphere soaks up a lot of their energy. A typical cosmic ray has its first collision high in the atmosphere, and it undergoes many more on the way down. By comparison, the first collision in Mars’ thin atmosphere happens near ground level, making the radiation load there about the same as in space. This is a nearly fatal flaw in Andy Weir’s excellent novel The Martian and many other schemes for colonization.
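The contrast between Earth and Mars comes down to how much air sits overhead. The column mass per unit area is surface pressure divided by surface gravity; dividing it by the distance a cosmic-ray proton typically travels in air before a nuclear collision gives the number of "chances" to collide. The sketch assumes an interaction length of about 90 g/cm² and a mean Martian surface pressure of 610 pascals; both are approximate values we supply, not figures from the text.

```python
import math

INTERACTION_LENGTH_G_CM2 = 90.0  # approx. proton-air interaction length (assumed)

def column_depth_g_cm2(surface_pressure_pa: float, gravity_m_s2: float) -> float:
    """Mass of air above 1 cm^2 of ground: P / g, converted from kg/m^2 to g/cm^2."""
    kg_per_m2 = surface_pressure_pa / gravity_m_s2
    return kg_per_m2 * 0.1  # 1 kg/m^2 = 0.1 g/cm^2

for name, p, g in (("Earth", 101325.0, 9.81), ("Mars", 610.0, 3.71)):
    depth = column_depth_g_cm2(p, g)
    n = depth / INTERACTION_LENGTH_G_CM2
    print(f"{name}: {depth:.0f} g/cm^2, about {n:.1f} interaction lengths, "
          f"chance of reaching the ground with no collision {math.exp(-n):.2g}")
```

On Earth a primary cosmic ray faces roughly a dozen interaction lengths; on Mars it faces a fraction of one, so most primaries reach the surface before colliding at all.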

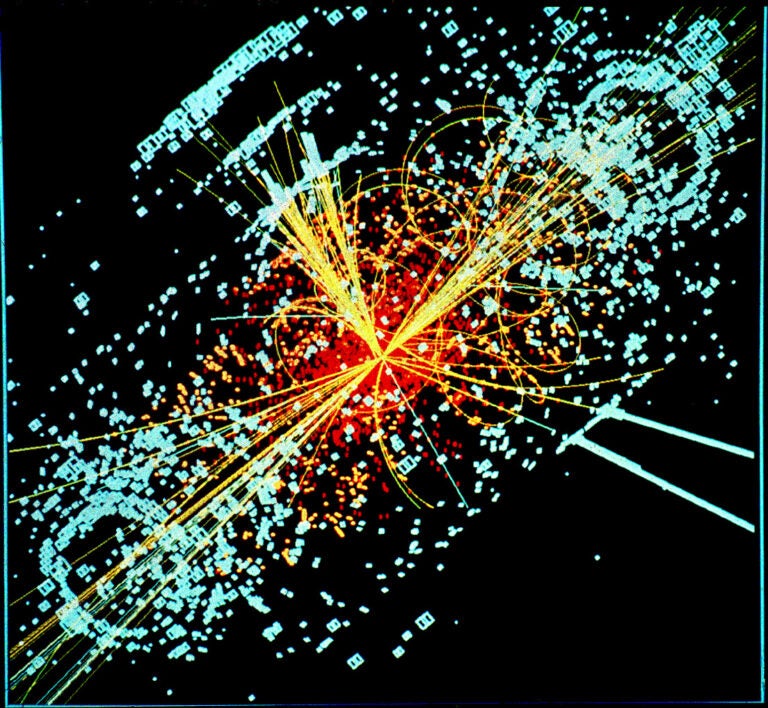

Within Earth’s atmosphere, an air shower cascade occurs. Particles collide with other particles, producing new particles. Energy is lost because molecules in the atmosphere are ionized, which may lead to ozone depletion in the stratosphere described earlier. The result is that here on the ground, we don’t get much radiation.

One thing we do get is muons. Think of these particles as a kind of “heavy electron” that penetrates all the way to the ground. Muons are unstable: at rest, they decay into electrons in about 2 microseconds on average, too short a time to cross the atmosphere even at nearly the speed of light. Still, because relativistic time dilation stretches the lifetimes of fast-moving muons, many of those produced in the upper atmosphere reach the ground, about 10,000 per square meter every minute. They even penetrate a few hundred meters of rock or water. Most pass right through us, but some interact, and there are so many of them that they account for about one-sixth of the total radiation dose we receive, on average.
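Time dilation is what lets muons survive the trip down. This sketch follows one muon on a straight vertical path and compares its survival odds with and without the relativistic slowing of its internal clock. The 4 GeV energy and 15-kilometer production height are assumed, representative values chosen for illustration.

```python
import math

MUON_LIFETIME_S = 2.197e-6   # mean lifetime at rest, seconds
MUON_REST_MEV = 105.66       # muon rest energy, MeV
C_M_PER_S = 2.998e8          # speed of light, m/s

def survival_fraction(total_energy_mev: float, path_m: float, dilate: bool = True) -> float:
    """Fraction of muons that survive a straight path of path_m meters."""
    gamma = total_energy_mev / MUON_REST_MEV
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    lab_time_s = path_m / (beta * C_M_PER_S)   # travel time seen from the ground
    # Time dilation: the muon's own clock runs slow by a factor of gamma
    proper_time_s = lab_time_s / gamma if dilate else lab_time_s
    return math.exp(-proper_time_s / MUON_LIFETIME_S)

# Assumed: 4 GeV muon created 15 km up, traveling straight down
print(f"with time dilation:    {survival_fraction(4000.0, 15000.0):.2f}")
print(f"without time dilation: {survival_fraction(4000.0, 15000.0, dilate=False):.1e}")
```

Without relativity, essentially none would arrive; with it, about half of these assumed muons reach the ground.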

Most cosmic rays lose their energy by the time they get into the stratosphere. However, the ones with the highest energy penetrate much farther and produce many more muons, which reach farther still.
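A standard toy picture of an air shower, the Heitler model, captures why higher energy means deeper penetration. Here we swap in that simplified electromagnetic model as an illustration; it is not the detailed simulation used in the research. Each particle splits in two after a fixed amount of air until the energy per particle drops to a "critical energy," so the shower peaks at a depth that grows with the logarithm of the starting energy. The radiation length of air (about 36.7 g/cm²) and a critical energy of about 85 million eV are approximate textbook values we assume.

```python
import math
from typing import Tuple

RADIATION_LENGTH_G_CM2 = 36.7   # radiation length of air (approximate)
CRITICAL_ENERGY_EV = 85e6       # approx. critical energy in air (assumed)

def heitler_shower(primary_ev: float) -> Tuple[int, float]:
    """Toy Heitler cascade: the particle count doubles every splitting length
    until energy per particle falls to the critical energy.
    Returns (number of doublings, depth of shower maximum in g/cm^2)."""
    n_max = primary_ev / CRITICAL_ENERGY_EV          # particles at maximum
    doublings = math.ceil(math.log2(n_max))
    depth_max = RADIATION_LENGTH_G_CM2 * math.log(n_max)
    return doublings, depth_max

for e in (1e9, 1e12, 1e15):
    n, x = heitler_shower(e)
    print(f"{e:.0e} eV: ~{n} doublings, maximum near {x:.0f} g/cm^2 (whole atmosphere ~1030)")
```

In this toy model a billion-eV primary peaks high up, under about 90 g/cm² of air, while a 10¹⁵ eV primary peaks under roughly 600 g/cm², well over halfway down through the atmosphere.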

Scientists have computed the cosmic ray flux at Earth for different configurations of the local galactic magnetic field. The case we’ll focus on here is a weak, tangled field, as expected inside a Local Bubble blasted out by earlier events. We take a type II supernova of typical energy that releases cosmic rays up to 10¹⁵ eV. Then we compute the paths these cosmic rays of various energies take to reach Earth.

Within 100 years, Earth is inundated by cosmic rays. The intensity is anywhere from 20 to 100 times the normal flux at high energies, depending on the distance to the supernova. At low energies, the flux doesn’t change much. This is crucial to understanding the effects.

Effects of rays on Earth

As mentioned earlier, the flux of muons on the ground goes up. This increases the radiation load, but not catastrophically. It might explain an apparent acceleration of mutations over the last few million years. It might enable some acceleration of evolution. And it might increase the cancer rate a bit, but it would be hard to see evidence of this in the fossil record.

Our recent computations suggest that the radiation dose from muons may go up by a factor of 100 or more for some time. The biggest change would be for large organisms in the upper ocean because of the penetrating ability of muons. It is tempting to think that there is some relationship between the supernovae and a recently documented marine megafaunal extinction at about the same time. This included Megalodon, which resembled a great white shark the size of a school bus.

What about ozone depletion, the old boogeyman? It doesn’t show up at any significantly damaging level. Researchers are used to dealing with ozone depletion scenarios from solar events and from gamma-ray bursts. In both cases, the radiation deposits its energy mostly in the stratosphere, where it can easily interfere with the ozone layer.

What we have found is that the high-energy cosmic rays from such a supernova pass right through the atmosphere. Instead of depositing their energy 20 to 30 miles (32 to 48 kilometers) up, they dump most of it about 7 miles (11 km) up, and still enhance ionization right down to the ground. This is in the troposphere, where weather happens and where about 75 percent of the mass of the atmosphere resides. People have not been used to thinking about radiation from supernovae affecting the troposphere. So there is not much effect on the ozone layer unless, of course, the supernova is much closer than the ones that astronomers have documented.
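The 75 percent figure can be reproduced roughly with an isothermal model of the atmosphere, in which pressure (and so the mass of air overhead) falls off exponentially with altitude. The 8-kilometer scale height is an assumed round number, and the real atmosphere departs from this idealization, so treat the output as approximate.

```python
import math

SCALE_HEIGHT_KM = 8.0  # rough isothermal scale height (assumed)

def fraction_of_mass_above(altitude_km: float) -> float:
    """Fraction of the atmosphere's mass above a given altitude, for an
    isothermal atmosphere in which pressure falls as exp(-h / H)."""
    return math.exp(-altitude_km / SCALE_HEIGHT_KM)

for h in (11.0, 32.0, 48.0):
    print(f"{h:>4.0f} km: {fraction_of_mass_above(h):.1%} of the air lies above")
```

About a quarter of the air lies above 11 km but only a few percent above 32 km, which is why energy dumped 7 miles up lands in the troposphere rather than in the ozone layer.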

The spark of an idea

We expect the biggest effect to be on lightning. Lightning starts when there is a big voltage difference between two regions, either within the atmosphere or between it and the ground. But lightning can’t get started by itself. It must rely on a leader — a path of increased ionization in which an electric field can accelerate free electrons. This sets up a growing cascade in which the accelerated electrons knock other ones loose, and you get a current that grows into a lightning bolt.

But where does the leader come from? Atmospheric scientists think the main mechanism is paths of ionization left by cosmic rays. So, a twentyfold increase in tropospheric ionization should lead to a big increase in cloud-to-ground lightning (because most of the cosmic ray tracks are more or less vertical).

The big change would be that ordinary storms would produce a lot more lightning. In normal conditions, lightning is the main ignition source for wildfires. Wildfires kill trees and other woody plants; more fires mean fewer trees and more grassland. The American Great Plains were largely kept as grassland by lightning-set wildfires. Native Americans set fires to renew the grass, which attracted bison. Even today, ranchers there conduct controlled burns on their rangeland. During the past few million years, many places, including the Great Rift Valley in East Africa, have converted from forest to grassland.

Finally, we can speculate on the consequences. The conversion from trees to grassland may have forced our ancestors out of trees and down to the ground, walking and using their hands. Once the cosmic rays (and consequent lightning) slack off, forest tends to replace grassland until a new burst of cosmic rays creates increased lightning. If this happened, it would require our predecessors to use their brains to adapt to a new environment.

And so it would go.