Hubble’s future as an astronomical tool still hangs in the balance. Last December, the National Research Council’s Committee on Assessment of Options for Extending the Life of the Hubble Space Telescope found robotic servicing too risky. The panel recommended astronauts service Hubble as soon as possible once the space shuttle returns to flight. The American Astronomical Society, the world’s largest professional organization for astronomers and astrophysicists, backed this decision, lauding Hubble as “the most productive telescope since Galileo’s.” O’Keefe announced his departure as NASA chief the following week.

Beckwith says that careful management of Hubble may prolong its life into 2009. However, no funds for either servicing option have been allocated in NASA’s 2006 budget.

Beckwith spoke with Astronomy in September 2004, as astronomers unveiled their first analyses of the Hubble Ultra Deep Field (HUDF).

Beckwith: We absolutely had to get these very deep optical images while it was still possible. And I’m glad we did, because Hubble may not be around long enough to do it otherwise. I think the Ultra Deep Field is going to remain a real legacy for the future … a necessary tool for any analysis that will be done by [the] James Webb [Space Telescope], by Hubble with another infrared camera, or Spitzer, or any new satellite. If you look at it, it’s really quite beautiful.

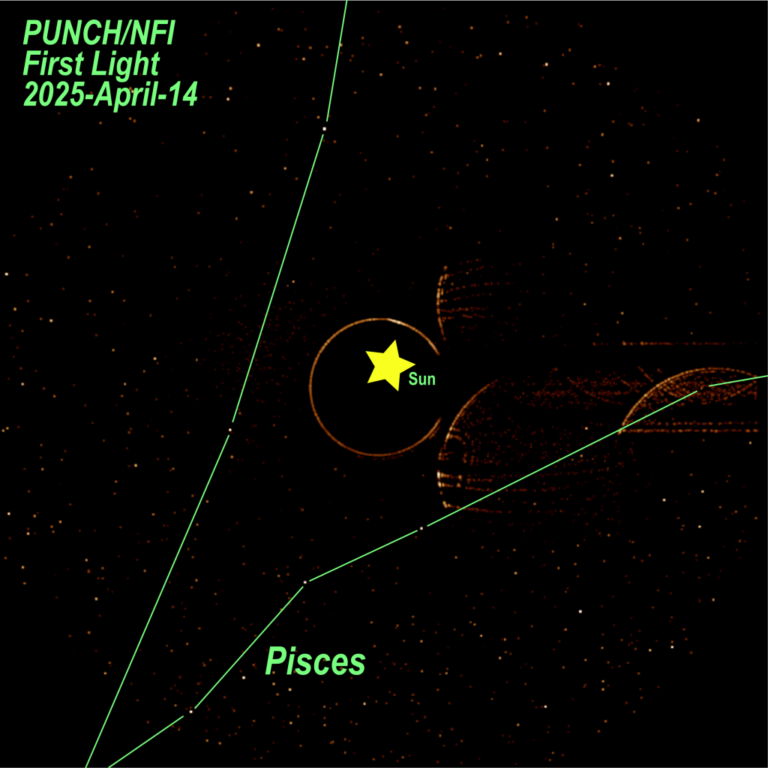

B: There’s no doubt that we are seeing a population of very red objects that are very distant. You see them only in the longest wavelength, what we call the z filter, at about 9,000 Ångstroms. In a number of cases, they’re confirmed by observations at longer infrared wavelengths, either with NICMOS [the Near Infrared Camera and Multi-Object Spectrometer on Hubble] or with IRAC [the Infrared Array Camera] on Spitzer.

We’re pretty sure those objects are also at redshifts 6 or greater. [That’s about 12.7 billion light-years away. Thought of in another way, we’re looking at these galaxies as they were less than 950 million years after the Big Bang.]

But then the question is: “Is this the population of objects that caused the reionization of the universe after the Big Bang, after the Dark Ages?”

That is a controversial issue. The ultraviolet radiation that must reionize the universe is essentially absorbed by all the stuff between us and the distant objects. Ultraviolet light just doesn’t penetrate very far. So, taking that population of objects that is observed at wavelengths too long to ionize hydrogen, scientists have to extrapolate their observations to estimate the amount of ionizing radiation. They have to ask, for example, based on the [infrared and visible] light we see, “Can we … figure out if the [ultraviolet] light that we don’t see was enough to reionize the universe?”

Unfortunately, the uncertainties in that extrapolation are such that you can get either answer. Two research groups have said, “Yes, there is clearly enough radiation to ionize the universe, so we have seen the first galaxies.” And one group says, “No, we use different assumptions and don’t agree.”

B: I think that deep infrared observations to see if there’s another population we don’t see could settle the question in the future; if no such population is discovered, I think that will settle it. But those observations are not immediately on the horizon, because it will probably take the Wide Field Camera 3 on Hubble and a servicing mission [to install it] to actually do the job right.

Spitzer might provide us with some of the answers soon. I don’t know if it’ll have enough spatial resolution to see HUDF galaxies uniquely, but it certainly is a very sensitive infrared telescope.

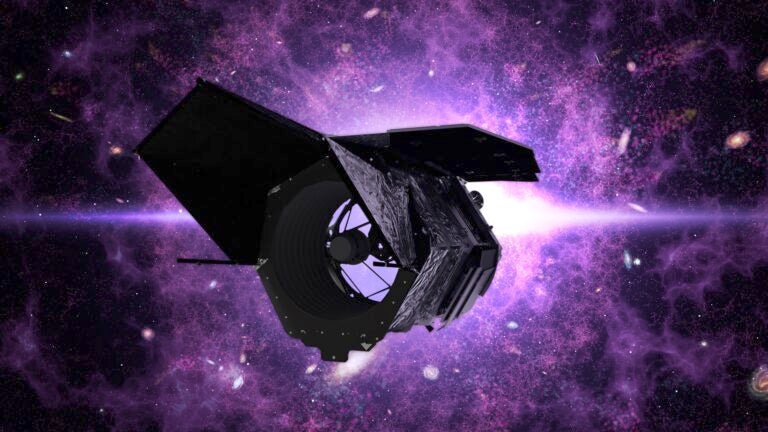

[The James] Webb [Space Telescope] is designed to answer this question unequivocally. There’s no doubt that any uncertainties left by Hubble, even if we have another servicing mission, will be answered by Webb. But Webb’s a few years away — we certainly would love to have the answer before we get to Webb.

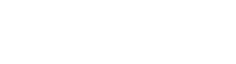

B: I think a lot of it was unexpected. If you look carefully at the Deep Field — you have to enlarge it on a computer screen — the galaxies become … I guess the right word is “blobbier.” They’re no longer the beautiful spirals or ellipticals we see nearby. The Deep Field was the first image where you could see that the universe looked different at an earlier epoch.

— Steven Beckwith

There was enough blurring in ground-based images [prior to the HDF] that you really couldn’t tell if galaxies were getting substantially smaller or changing shape, even at very, very high redshifts. Ground-based images just didn’t have the resolution. For that reason, a number of people predicted before the Hubble Deep Field was taken that it wouldn’t be very interesting. They said that galaxies are diffuse and deeper images will not show any more detail.

Well, that prediction turned out to be dead wrong. As you go deeper, galaxies are not more diffuse — in fact, they’re more compact and they’re broken up, so both the changes in structure and size are interesting. And a typical galaxy at high redshift is smaller than 200 milliseconds of arc, which all astronomers know is below the seeing limit of a good modern site. With Hubble, of course, 200 milliseconds of arc is easily resolvable — several resolution elements across.

It turned out that Hubble is just ideal for imaging the most distant galaxies. It hits a beautiful sweet spot for this problem. And the Ultra Deep Field confirmed that impression very well. As you look back farther in time, you see galaxies becoming even smaller and more blobby, if you like, because you’re looking at early pieces that will be assembled into the regular structures we see today.

B: Yes, servicing mission SM3B was absolutely essential to our ability to carry out the Ultra Deep Field. [In March 2002, astronauts installed the Advanced Camera for Surveys (ACS) and added a new, experimental cooling system to NICMOS, which allowed it to return to active duty.]

At these faint sensitivities [in the HUDF], we’re looking at redshifts that shift the light from objects beyond the longest wavelength band in the Hubble Deep Field. We wouldn’t have seen any of the most distant HUDF objects in the Hubble Deep Field, because they would have been redshifted beyond the I-band filter. The new Advanced Camera for Surveys was an essential step forward to do this research.

NICMOS works out to 2.5 microns on Hubble, and at the longest wavelengths, we could see objects to a redshift of 12 [less than 400 million years after the Big Bang]. At about a redshift of 7, ACS becomes useless because the light from objects shifts out of its reddest band. In the initial NICMOS images, Rodger Thompson and his collaborators found several objects at infrared wavelengths beyond 1 micron [that weren’t evident] in the ACS images at shorter wavelengths. So, the initial interpretation was that they were very high redshift objects [beyond redshift 7].
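[The redshift limits quoted here follow from simple arithmetic: light emitted at a rest wavelength λ arrives stretched to λ(1 + z). The short Python sketch below is an editorial illustration, not part of the interview. It assumes the feature being tracked is hydrogen’s Lyman-alpha line at a rest wavelength of 1,215.67 Ångstroms, blueward of which intervening hydrogen absorbs most of a distant galaxy’s light. The function names are hypothetical.]

```python
# Illustrative only: where does hydrogen's Lyman-alpha line land after redshifting?
# Assumption: rest wavelength of 1215.67 angstroms; names below are hypothetical.
LYMAN_ALPHA_REST_ANGSTROM = 1215.67


def observed_wavelength_angstrom(redshift, rest=LYMAN_ALPHA_REST_ANGSTROM):
    """Observed wavelength of a line emitted at `rest` by a source at `redshift`."""
    return rest * (1.0 + redshift)


def redshift_for_wavelength(observed, rest=LYMAN_ALPHA_REST_ANGSTROM):
    """Redshift at which a line emitted at `rest` is observed at `observed`."""
    return observed / rest - 1.0


if __name__ == "__main__":
    # Redshifts mentioned in the interview.
    for z in (6.0, 6.7, 7.0, 12.0):
        lam = observed_wavelength_angstrom(z)
        print(f"z = {z:4.1f}: Lyman-alpha near {lam:6.0f} angstroms "
              f"({lam / 1e4:.2f} microns)")

    # Redshift at which the line reaches the ~9,000-angstrom z filter.
    print(f"Line reaches 9,000 angstroms at z = {redshift_for_wavelength(9000.0):.2f}")
```

[Under that assumption, the line passes the 9,000-Ångstrom mark of the z filter at a redshift of about 6.4 and sits near 9,700 Ångstroms at a redshift of 7, which is why ACS runs out of bands there. At a redshift of 12 it falls near 1.6 microns, inside the range NICMOS covers.]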

B: Many of those objects were false identifications — probably cosmic-ray particles absorbed by the detectors that weren’t sufficiently removed by the data-reduction process. A careful look at our ACS images showed that we did, in fact, find some of them in the Ultra Deep Field. They were faint, but they weren’t as red as originally thought.

Now, the initial interpretation of the NICMOS results didn’t turn out very well, but it was still a great idea. Thompson’s proposal was a very prescient one, and we would love to have deeper infrared data, even from Hubble. [But, with NICMOS,] we weren’t getting enough sensitivity to pick up the faint red objects. [ACS is sensitive enough to see them, but it doesn’t operate far enough into the infrared. NICMOS sees the right wavelengths, but it isn’t sensitive enough to see such objects.] You could see them with Wide Field Camera 3 and enough telescope time.

With Wide Field Camera 3, we could do a very clean experiment. We could ask if the population of objects we see with ACS is also present at higher redshifts. If it isn’t, it’s almost certain objects in the Ultra Deep Field are the first to emerge after the Big Bang. If the population is present, then the objects at higher redshifts may be responsible for reionization.

It’s absolutely certain that if we have SM4 and put on Wide Field Camera 3 — which has a sensitive infrared array in it — we should be able to solve this problem. We’ll be able to reimage the Hubble Ultra Deep Field and get a tremendous gain in sensitivity.

B: There is a grism on the ACS that allows us to do slitless spectroscopy of the whole field. [A grism is a prism with grooves cut into one surface that allows light to be dispersed into a spectrum.] One of the groups here [at STScI], [headed by] Sangeeta Malhotra, took grism images of the Ultra Deep Field. [She and her colleagues] observed many objects for which they measure the redshifts directly. The most distant one that we can confirm spectroscopically is at a redshift of 6.7. And I think that’s probably the most distant object that has been observed and confirmed directly. [Light from this object has been heading toward us for 12.8 billion years. It departed the young galaxy when the universe was around 820 million years old.]

There are claims for objects out at redshifts close to 10, thanks to fortuitous magnification by gravitational lensing, but these have recently been called into question. The grism spectra of the objects in the Ultra Deep Field are direct measurements.

B: One of them is the high-redshift universe and galaxy formation with Wide Field Camera 3.

[Another] science topic … is the accelerating universe and the issue of dark energy. Hubble uniquely can find and study supernovae at redshifts greater than 1. Right now, that’s the high-leverage place for understanding the time-expansion history of the universe — you know, the transition between decelerating at the beginning to accelerating more recently.

B: Above [a redshift of] 1, I think 7 or 8. Over the next 2 years, we hope to increase it to about 30. But then, if we had Wide Field Camera 3, which is well-suited to the infrared measurements, and more telescope time, say, another 4 or 5 years, we could increase the number to well over 100.

I think if Hubble were to continue, it would make a significant advance in the dark energy problem. Plus … to hunt for these supernovae, you basically have to map the sky. And when you’re mapping, you pick up great information on galaxy formation, as in the Ultra Deep Field.

The science is very good, very robust with Hubble. It’s not slowing down in any way, and servicing would help continue that progress.

B: We’re down to four gyroscopes out of a full complement of six. We operate with three. We’re trying to get a two-gyro control mode under way, which requires changing the flight software, and we believe we can make the telescope point on two-gyro mode. But, probably, the pointing jitter will be higher, and may affect the images. We don’t know yet.

[Jitter is] our equivalent of seeing on the ground. Over a 1-hour exposure, the telescope moves a little bit. Right now, with three gyroscopes, drift is less than 5 milliseconds of arc. Since the image size for point sources is 50 milliseconds of arc, the current jitter is inconsequential. What we’re worried about with the two-gyro mode is that the jitter may increase to 10 or 20 milliseconds of arc. For wide-field work, this is probably okay, but for high-resolution work, it may be a problem.
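[A rough way to see why 5 milliseconds of arc is harmless while 20 might matter is to combine the two blurs in quadrature. The Python sketch below is an editorial illustration, not Hubble’s actual pointing model. It assumes the 50-millisecond image size and the jitter behave like independent, roughly Gaussian widths, which the interview does not state, and the function name is hypothetical.]

```python
import math

# Illustrative only: combine image size and pointing jitter in quadrature.
# Assumption: both behave like independent Gaussian-like widths (not stated
# in the interview); the function name is hypothetical.
POINT_SOURCE_SIZE_MAS = 50.0  # image size quoted for point sources


def blurred_width_mas(image_size_mas, jitter_mas):
    """Effective image width when jitter adds in quadrature to the image size."""
    return math.hypot(image_size_mas, jitter_mas)


if __name__ == "__main__":
    # Jitter values mentioned in the interview, in milliseconds of arc.
    for jitter in (5.0, 10.0, 20.0):
        width = blurred_width_mas(POINT_SOURCE_SIZE_MAS, jitter)
        growth = 100.0 * (width / POINT_SOURCE_SIZE_MAS - 1.0)
        print(f"jitter {jitter:4.1f} mas -> width {width:5.2f} mas "
              f"(+{growth:.1f}%)")
```

[Under these assumptions, 5 milliseconds of arc broadens a point source by about half a percent, while 20 broadens it by several percent, enough to matter for the sharpest imaging but not for wide-field surveys.]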

The batteries, we think, with care, may last until 2009 or 2010. If the batteries go, the whole satellite basically shuts down, and we don’t think we can recover it with servicing.

The transmitters are old enough that we’re worried about their performance. So, we’ve changed some of the science we do. We no longer take parallel data [that is, taking data on multiple instruments] on every field with all instruments as a matter of routine because it just puts a lot more stress on the transmitters when transmitting the data back down to Earth. We only do parallel observations when they’re required for science. We did an analysis and found that these routine parallels weren’t producing a whole lot of science anyway.

B: It’s obvious it can be done with the shuttle because NASA has done that 4 times. But can it be done with robots?

The people who are working on the robots believe it can be, and they believe it can be done in time. Part of the reason for that belief is they have taken a robot [known as Dextre] and done laboratory demonstrations of all of the [instrument-replacement] tasks. They feel they’ve demonstrated in the lab that servicing is doable with Dextre. The second reason they think it’s doable is that Dextre is already space-qualified. It was built for the space station.

All they need to do is develop tools to go on the end of Dextre’s arm to perform the specialized tasks for Hubble. They develop new tools for every servicing mission anyway: The astronauts are very practiced at using newly developed tools.

[The NASA engineers have] a lot of confidence they could mount a rapid servicing mission to Hubble with robots and make it work. In fact, they believe the most challenging task is not so much the replacement of parts once they dock with Hubble, it’s the actual docking — automatic rendezvous and capture. On the shuttle, you’ve got astronauts sitting there who are manipulating the arm, and watching Hubble, and manipulating the shuttle itself … and this would have to be done by a robot automatically.

B: I think that difficulty can be overcome. There’s worry about the time delay because commands have to be relayed through TDRSS [the Tracking and Data Relay Satellite System]. I think they expect a half-second delay in the best possible case, and a half second turns out to be enough to make real-time manipulation difficult. Have you ever had your computer mouse freeze up and delay for a fraction of a second? It’s like that, I imagine.

Ten or 15 years ago, transatlantic telephone calls would sometimes go through a geosynchronous satellite. You’d say something, and then you’d pause, and you’d wait for a response, and you wouldn’t hear it. So you’d start talking again, but by then, the other person had started talking, and so your conversations collided.
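[The half-second figure is close to what light-travel time alone imposes on a geosynchronous relay. The Python sketch below is an editorial illustration under simplifying assumptions: the relay satellite sits directly overhead at the standard geosynchronous altitude of about 35,786 kilometers, Hubble’s low orbit is treated as ground level for distance purposes, and processing delays are ignored. The names are hypothetical.]

```python
# Illustrative only: light-travel delay through a geosynchronous relay.
# Assumptions: satellite directly overhead, spacecraft's low orbit treated as
# ground level, no processing or network delays; names are hypothetical.
GEO_ALTITUDE_KM = 35_786.0          # standard geosynchronous altitude
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum


def relay_delay_s(legs):
    """Delay for a signal crossing `legs` ground-to-satellite distances."""
    return legs * GEO_ALTITUDE_KM / SPEED_OF_LIGHT_KM_S


if __name__ == "__main__":
    one_way = relay_delay_s(2)     # operator -> relay -> spacecraft
    round_trip = relay_delay_s(4)  # command out, then feedback back
    print(f"one-way command delay: {one_way:.3f} s")
    print(f"command plus feedback: {round_trip:.3f} s")
```

[With these numbers, a command takes roughly a quarter of a second to arrive, and an operator waits close to half a second before seeing the result, in line with the best case Beckwith describes.]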

Telerobotics is something NASA wants to try, but I think the plan is to have most of the tasks done automatically. Ground controllers could monitor the progress of automatic tasks, and if anything got stuck, an astronaut on the ground could correct it telerobotically. There are a lot of things NASA still has to work out, but I believe those are the general plans.

B: The announcement on January 16, which we refer to around here as “Black Friday,” was pretty devastating for all of us, and I was quite down in the dumps. But we, very quickly, got an overwhelming response from the world. My e-mail traffic went through the roof in the days following the announcement. We got phone calls and letters — just an incredible show of support. Over the months of spring 2004, that support not only didn’t abate, it increased. And that support was tremendously gratifying.

While I was devastated by the decision, within a few days, I decided that we had to fight it. We had to be public advocates for science. At the beginning, I didn’t have a very strong hope that we could affect the outcome, but as the weeks went on, I became increasingly optimistic that NASA was going to have to find a way to service Hubble. There was just too much public support for that part of its program and too little public support for many of the other things NASA was trying to do.

Indeed, by the time the Lanzerotti committee [the National Research Council’s Committee on Assessment of Options for Extending the Life of the Hubble Space Telescope, chaired by Louis J. Lanzerotti of the New Jersey Institute of Technology, convened in May 2004] was meeting, it was clear that [members] were taking a similar point of view — that Hubble was an incredibly valuable facility, perhaps more valuable than many other things that NASA wanted to spend money on, and NASA would have to find a way to keep it viable. I believe Sean O’Keefe was coming to the same conclusion. He probably underestimated the extent to which Hubble represents a positive image of the agency.

Now, I have to tell you that the robotic mission would be exotic and nice for science, but it would still be a diminished mission compared to the space shuttle. We don’t think we’re going to be able to do everything with robots that we would do with astronauts. It’s just harder. At the moment, I believe it would be better to go back with the shuttle. If we’re going to continue flying the shuttle at all, I can’t think of a worthier thing to do with it than to go back to Hubble, so I continue to hold out some hope that NASA might change its decision.

It was a very difficult battle, and it was very clear when O’Keefe made his announcement, it was a personal decision on his part — and my advocacy and that of my colleagues was not going to be greeted warmly by NASA. That was a difficult position to be in, but I think we did the right thing, nevertheless. I’m cautiously optimistic Hubble will be serviced by some means.

Hubble is the original comeback kid, and I think it can come back again.
