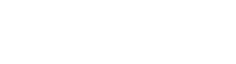

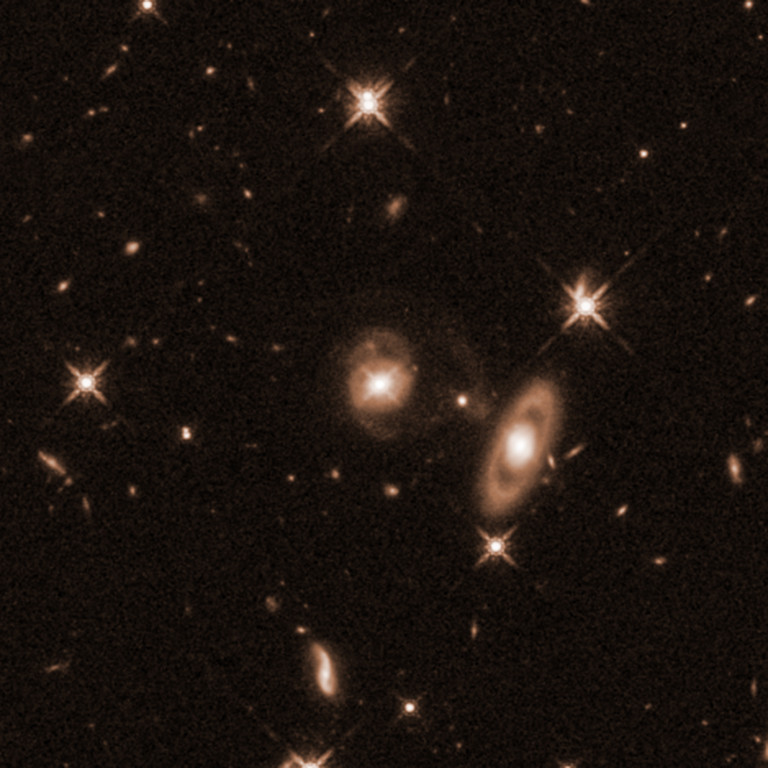

The distribution of galaxies on large scales encodes valuable information about the origin and fate of the universe. To study this, BOSS, a branch of the Sloan Digital Sky Survey (SDSS-III), has measured the redshift distribution of galaxies with unprecedented accuracy. One important question arises in the analysis of the data provided by such surveys: If the universe is comparable to a huge unique experiment, how can we determine the uncertainties in the measurement of quantities derived from observing it?

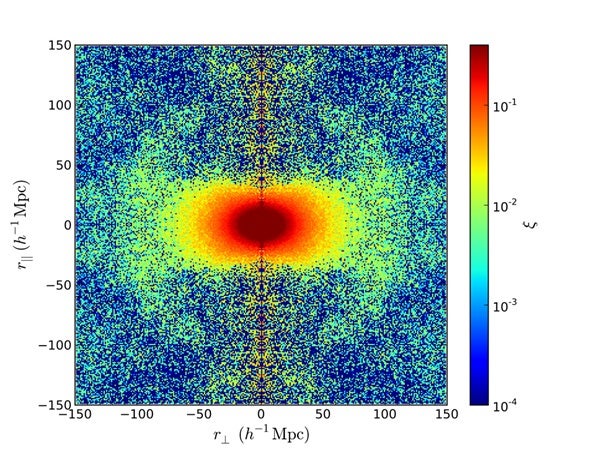

While common experiments can be repeated an arbitrary number of times in the laboratory, the cosmic universe is only reproducible in super-computing facilities. One needs to consider the statistical fluctuations caused by the so-called cosmic variance, having its origin in the primordial seed fluctuations. However, reconstructing the large-scale structure covering the volumes of a survey like BOSS from the fluctuations generated after the Big Bang until the formation of the observed galaxies after about 14 billion years of cosmic evolution is an extremely expensive task, requiring millions of super-computing hours.

Francisco Kitaura from the Leibniz Institute for Astrophysics Potsdam (AIP) states: “We have developed the necessary techniques to generate thousands of simulated galaxy catalogs, reproducing the statistical properties of the observations.”

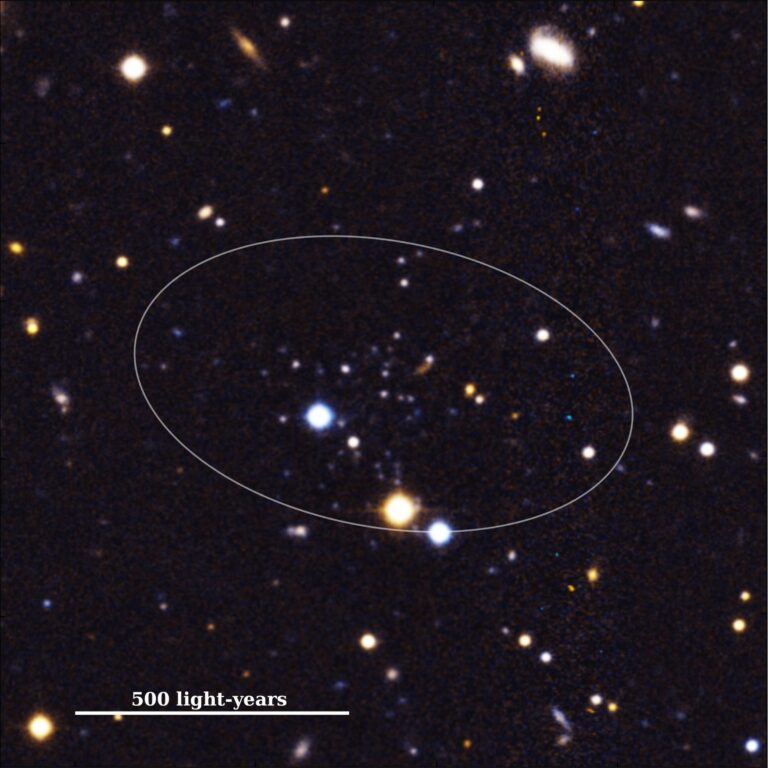

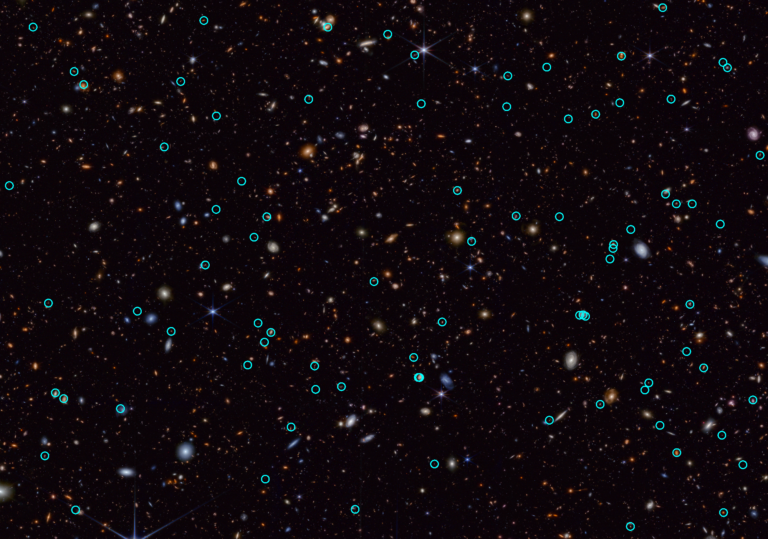

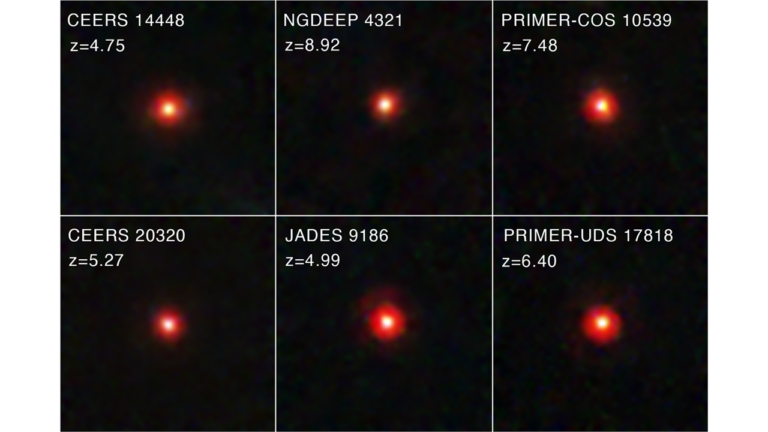

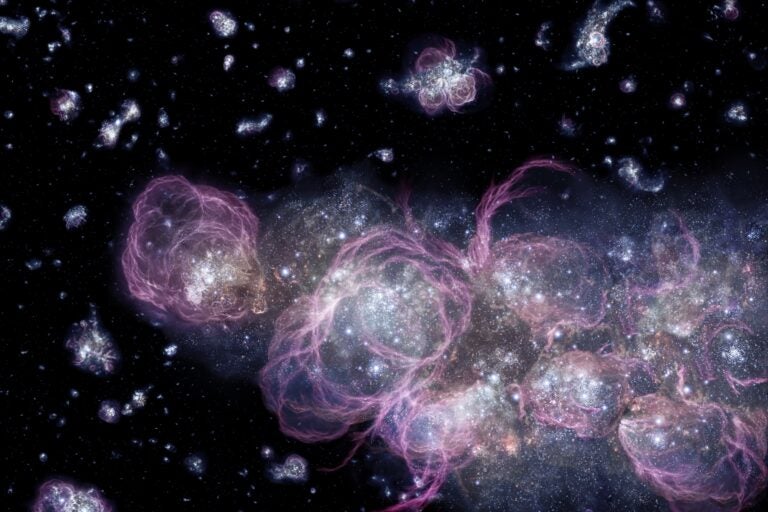

The production of the catalogs followed three steps: first, thousands of dark matter fields were generated with different seed perturbations at different cosmic epochs. Second, the galaxies were distributed in a nonlinear, stochastic way matching the statistical properties of the observations. Third, the mass of each galaxy — determined by its environment — was reconstructed. Finally, the catalogs of different cosmic times were combined into light cones reproducing the observational properties of the BOSS data, such as the survey geometry, and galaxy number density at different distances and look-back times.

Chia-Hsun Chuang from the AIP explained: “With this novel approach, we are able to reliably constrain the errors to the cosmological parameters we extract from the data.”

“The MareNostrum super-computing facility at the Barcelona Supercomputing Center was used to produce the largest number of synthetic galaxy catalogs to date covering a volume more than ten times larger than the sum of all the large volume simulations carried out so far,” said Gustavo Yepes from the Autonomous University of Madrid.

The statistical properties extracted from the data and compared to the models have helped gain new insights: “Now we understand better the relation between the galaxy distribution and the underlying large-scale dark matter field. We will continue refining our methods to further understand the structures we observe in the universe,” concluded Kitaura.