In 1893, English astronomer Edward Walter Maunder drew attention to a striking gap in the historical record: Between 1645 and 1714, astronomers reported almost no sunspot activity. This prolonged solar lull, which has not recurred since, came to be called the “Maunder minimum,” and it coincided with the coldest part of the Little Ice Age in Europe and North America. That period of cooling began around 1300 and lasted several centuries.

Maunder also noticed that the northern lights, which are caused by solar storms, became less frequent and less intense during the same period. Because stars are dimmer when they are inactive, it is reasonable to suspect a link between the drop in activity on the Sun and the drop in temperature on Earth.

After astronomers made this connection in 1976, they began searching nearby Sun-like stars for other examples of such stellar minima. They hoped to understand what causes these inactive periods and perhaps to predict our own Sun's next one, and with it the next period of global cooling.

But new research by astronomers at the University of California, Berkeley, casts doubt on the hundreds of stars that have been classified as Maunder minimum stars. In a poster at this month’s meeting of the American Astronomical Society, Jason Wright and Geoffrey Marcy presented a study of 1,200 supposedly Sun-like stars showing that those displaying minimal activity are actually quite different from our own Sun, and therefore cannot be in a Maunder minimum.

“Star surveys typically find that 10 to 15 percent of all Sun-like stars are in an inactive state like the Maunder minimum, which would indicate that the Sun spends about 10 percent of its time in this state,” Wright said. “But our study shows that the vast majority of stars identified as Maunder minimum stars are … not Sun-like at all, but are either evolved stars or stars rich in metals like iron and nickel.”

In fact, they haven’t found a single star that is unquestionably in a Maunder minimum.

“We thought we knew how to detect Maunder minimum stars, but we don’t,” Wright said.

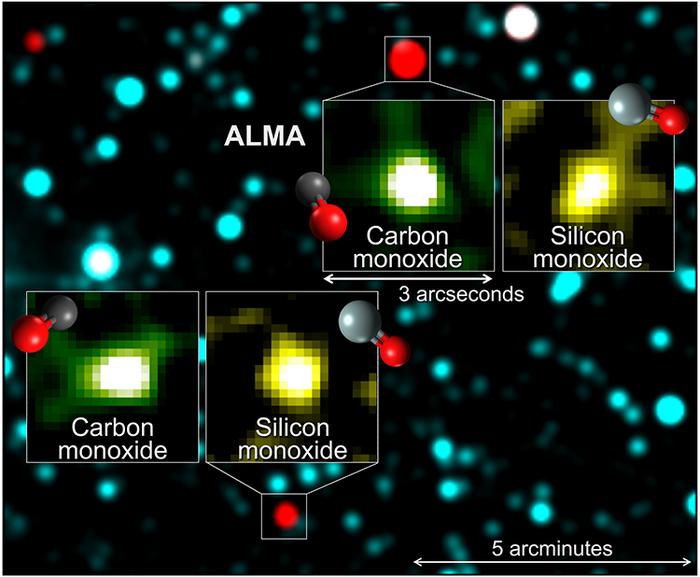

The standard method has been to measure the emission of calcium in a star’s atmosphere. When our own Sun is active, its upper atmosphere reaches temperatures of some 10,000 K, causing calcium to emit blue light. A Sun-like star with weaker calcium emission, therefore, should be a star in a period of inactivity.
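To make the calcium test concrete, here is a minimal Python sketch of a Mount Wilson-style activity index (the "S-index"), which is roughly the flux in the cores of the calcium H and K lines divided by the flux in two nearby continuum bands, scaled by an instrument calibration constant. The function names, the `alpha` parameter, and the 80 percent cutoff in `looks_inactive` are illustrative assumptions, not the procedure used in the Berkeley study. A rule like `looks_inactive` is exactly the kind of test the study calls into question, since it says nothing about a star's metal content or age.

```python
def s_index(flux_h: float, flux_k: float, flux_r: float, flux_v: float, alpha: float) -> float:
    """Ratio of Ca II H+K core flux to continuum flux (R and V bands).

    `alpha` is an instrument-specific calibration constant; here it is
    simply supplied by the caller (an assumption for illustration).
    """
    continuum = flux_r + flux_v
    if continuum <= 0:
        raise ValueError("continuum flux must be positive")
    return alpha * (flux_h + flux_k) / continuum


def looks_inactive(s: float, baseline_s: float, fraction: float = 0.8) -> bool:
    """Hypothetical naive rule: call a star inactive if its S-index falls
    below `fraction` of a reference (e.g. solar-like) value.

    Note: this ignores metallicity and evolutionary state entirely.
    """
    return s < fraction * baseline_s


if __name__ == "__main__":
    s = s_index(1.0, 1.0, 10.0, 10.0, alpha=2.0)
    print(s, looks_inactive(s, baseline_s=0.3))
```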

But Wright has found that the weak calcium emission of stars classified as Maunder minimum stars has other, more likely causes. One is a higher metal content: such stars burn more brightly than our own Sun but also exhibit less activity. Another is age: As stars get older, they spin more slowly, produce weaker magnetic fields, and lose their spots.

“What astronomers have assumed are Sun-like stars going through a stellar funk are actually very, very old stars whose magnetic fields have turned off forever,” Marcy said. “They are not in a temporary Maunder minimum, but a permanent one. They’re dead.”

This problem is surfacing only now because precise distances to nearby stars became available only after the Hipparcos satellite, launched in 1989, released its catalog in 1997. Once the distances were pinned down, it became possible to calculate the stars’ absolute brightnesses, and that is where the inconsistencies with the Maunder minimum theory began to show.
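The step from distance to absolute brightness uses the standard distance modulus, m - M = 5 log10(d / 10 pc), where the distance d in parsecs is 1,000 divided by the parallax in milliarcseconds. The Python sketch below applies it. The solar value of about 4.83 is approximate, and the one-magnitude margin in `much_brighter_than_sun` is a hypothetical screen, assuming the star's color is Sun-like, for spotting stars too luminous to be ordinary main-sequence stars like the Sun.

```python
import math

# Approximate absolute visual magnitude of the Sun.
SUN_ABS_MAG_V = 4.83


def absolute_magnitude(apparent_mag: float, parallax_mas: float) -> float:
    """Absolute magnitude from apparent magnitude and parallax (milliarcseconds).

    Distance in parsecs is 1000 / parallax_mas; the distance modulus
    m - M = 5 * log10(d / 10 pc) is then solved for M.
    """
    if parallax_mas <= 0:
        raise ValueError("parallax must be positive")
    distance_pc = 1000.0 / parallax_mas
    return apparent_mag - 5.0 * math.log10(distance_pc / 10.0)


def much_brighter_than_sun(apparent_mag: float, parallax_mas: float,
                           margin_mag: float = 1.0) -> bool:
    """Hypothetical screen: True if the star is at least `margin_mag`
    magnitudes brighter than the Sun (smaller magnitude = brighter)."""
    return absolute_magnitude(apparent_mag, parallax_mas) <= SUN_ABS_MAG_V - margin_mag


if __name__ == "__main__":
    # A magnitude-5.0 star at 25 mas (40 pc) versus one at 100 mas (10 pc).
    for parallax in (25.0, 100.0):
        m_abs = absolute_magnitude(5.0, parallax)
        print(parallax, round(m_abs, 2), much_brighter_than_sun(5.0, parallax))
```

For example, a star of apparent magnitude 5.0 with a parallax of 25 mas lies 40 pc away, giving an absolute magnitude of about 1.99, nearly three magnitudes brighter than the Sun. Without the distance, the two stars in the usage example would look identical.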

“We still don’t understand what’s going on in our Sun, how magnetic fields generate the 11-year solar cycle, or what caused the magnetic Maunder minimum,” Marcy said. “In particular, we don’t know how often a Sun-like star falls into a Maunder minimum, or when the next minimum will occur. It could be tomorrow.”